Fair Lending Program Considerations: A 5‑Pillar Guide

Learn practical Fair Lending Program considerations for fintechs: a five‑pillar framework, launch checklist, and audit playbook to avoid delays and fines.

Introduction — What This Guide Covers

Compliance delays kill launches.

This guide shows how to build a fair lending program that prevents those delays.

You’ll get a five‑pillar program, an operational checklist, an exam playbook, common mistakes with fixes, and two practical ways fractional compliance closes skill gaps without hiring full‑time staff.

Why Fair Lending Matters for Fintechs

Regulators enforce fair lending laws aggressively. Civil money penalties, remediation orders, and reputational damage follow when outcome data shows discriminatory effects and poor documentation leaves a firm unable to explain the pattern. The CFPB’s 2024 fair‑lending report highlights current examiner priorities and enforcement trends you should expect.

For product teams, gaps look like delayed releases and rewrites. One missed disclosure or an uncontrolled experiment can push a feature launch from weeks to months. Small fintechs aren’t immune: both agencies and private plaintiffs pursue firms when data shows problematic outcomes.

Agency posture is shifting across regulators, which changes examiner focus and evidence expectations. Track notices like the OCC bulletin on disparate impact to understand multi‑agency nuance. Public datasets such as HMDA remain core benchmarking tools during exams.

Product, engineering, legal, compliance, and executives must share ownership. Without that, decisions stall and engineering reworks features to "pass" rules nobody has written down.

One short example: a payments feature was flagged mid‑release because experiment logging missed a critical flag. The fix cost two sprints and delayed the release. That’s avoidable.

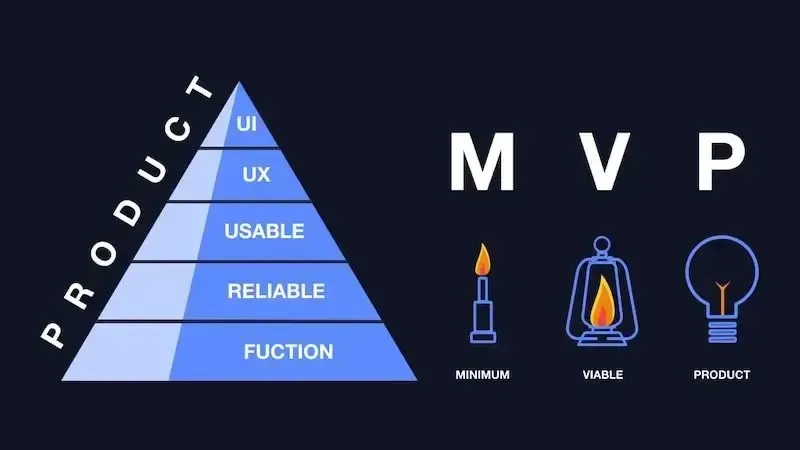

Five Pillars of a Repeatable Fair Lending Program

Treat fair lending as a living program. These five pillars are practical controls you can assign, measure, and improve.

Pillar 1 — Inventory data and lineage

Good testing starts with good data. Inventory sources, required fields, and joins you need to reconstruct offers and denials. Validate completeness and consistency before analytics.

Log data lineage and transformation steps so an examiner can trace an offer back to raw inputs.

Example: capture model version, feature flag state, experiment ID, and reason code for every decision.
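A minimal sketch of such a decision record, assuming a JSON-lines log. The class and field names (`DecisionEvent`, `reason_codes`, and so on) are hypothetical, not a regulatory schema; adapt them to your own data dictionary.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Dict, Optional, Tuple


@dataclass(frozen=True)
class DecisionEvent:
    """One credit decision with the context needed to reconstruct it later.

    Hypothetical schema: field names are illustrative, not a standard.
    """

    application_id: str
    outcome: str                     # e.g. "approved", "denied", "counteroffer"
    model_version: str               # exact model build that produced the score
    experiment_id: Optional[str]     # None when no experiment touched the decision
    feature_flags: Dict[str, bool]   # flag state at decision time
    reason_codes: Tuple[str, ...]    # decision / adverse action reason codes
    decided_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def to_log_line(self) -> str:
        """Serialize as one JSON line for an append-only decision log."""
        return json.dumps(asdict(self), sort_keys=True)


event = DecisionEvent(
    application_id="app-123",
    outcome="denied",
    model_version="credit-model-4.2",
    experiment_id="exp-pricing-07",
    feature_flags={"new_pricing_tier": True},
    reason_codes=("R01",),
)
print(event.to_log_line())
```

One JSON object per line keeps each decision self-contained, so an exam sample can be pulled with a simple filter on `application_id`.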

For proxying race/ethnicity, follow the CFPB’s published Bayesian Improved Surname Geocoding (BISG) methodology and reuse its public code.
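The CFPB's published proxy method, Bayesian Improved Surname Geocoding (BISG), multiplies the probability of each race/ethnicity group given a surname by the probability of living in the applicant's geography given that group, then normalizes with Bayes' rule. The sketch below shows only that combining step. Group labels and probabilities are made-up illustrative numbers; in practice the inputs come from census surname and geography tables via the CFPB's own code.

```python
from typing import Dict


def bisg_posterior(
    p_group_given_surname: Dict[str, float],
    p_geo_given_group: Dict[str, float],
) -> Dict[str, float]:
    """BISG-style update: P(group | surname, geo) is proportional to
    P(group | surname) * P(geo | group), normalized to sum to 1.

    p_group_given_surname: surname-based prior for each group.
    p_geo_given_group: share of each group's population living in the
        applicant's geography (e.g. a census block group or tract).
    """
    unnormalized = {
        group: prior * p_geo_given_group.get(group, 0.0)
        for group, prior in p_group_given_surname.items()
    }
    total = sum(unnormalized.values())
    if total == 0:
        raise ValueError("No overlapping evidence; proxy is undefined.")
    return {group: value / total for group, value in unnormalized.items()}


# Illustrative numbers only, not real census values.
posterior = bisg_posterior(
    p_group_given_surname={"group_a": 0.6, "group_b": 0.3, "group_c": 0.1},
    p_geo_given_group={"group_a": 0.001, "group_b": 0.004, "group_c": 0.002},
)
print(posterior)  # group_b becomes the most likely group after the geography update
```

Whether you use the probabilities directly or apply a classification threshold is a methodology choice to document alongside the proxy's known limitations.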

Tip: one-line audit evidence beats a paragraph of claims. Log early. Log often.

Pillar 2 — Define policy and governance

Set clear scope, roles, and escalation paths. Codify decision thresholds and an exception approval flow. Version policies and schedule regular reviews. Map policy owners to federal and state requirements. If you run targeted credit programs, document legal basis and operational controls using industry guidance as a reference.

Small teams need a single owner for exceptions. Make that non‑optional.
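Versioning and review schedules are easier to enforce when they live in data rather than in a calendar reminder. A minimal sketch, assuming a hypothetical in-house policy registry; the annual default cadence is an assumption, not a regulatory requirement.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List, Tuple


@dataclass(frozen=True)
class PolicyDocument:
    name: str
    version: str                     # bump on every approved change
    owner: str                       # single accountable owner
    requirements: Tuple[str, ...]    # federal/state requirements it maps to
    last_reviewed: date
    review_every_days: int = 365     # assumed cadence; set your own

    def next_review(self) -> date:
        return self.last_reviewed + timedelta(days=self.review_every_days)


def overdue_reviews(policies: List[PolicyDocument], today: date) -> List[str]:
    """Return 'name vX (owner)' for every policy past its review date."""
    return sorted(
        f"{p.name} v{p.version} ({p.owner})"
        for p in policies
        if today > p.next_review()
    )


registry = [
    PolicyDocument("Exceptions policy", "1.2", "compliance-lead",
                   ("ECOA/Reg B",), date(2023, 1, 1)),
    PolicyDocument("Pricing policy", "3.0", "credit-lead",
                   ("ECOA/Reg B", "state fee rules"), date(2024, 1, 15)),
]
print(overdue_reviews(registry, today=date(2024, 3, 1)))
```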

Pillar 3 — Document models and product controls

Record model inputs, outputs, intended use, and limitations. Require pre‑deployment fairness checks and sensitivity tests. Implement guardrails: feature flags, kill switches, and documented manual overrides. Keep an experiment log for A/B tests affecting credit terms.

Follow agency expectations for AI explainability when models affect denials or pricing. Use NIST guidance for model governance where relevant.

Practical note: add a one‑paragraph model README to every deployed model. It saves time during an exam.
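A kill switch only helps if the decision path checks it and the log records which path ran. A minimal sketch, assuming a hypothetical `model_kill_switch` flag, a scoring function that returns an approval probability, and a cutoff of 0.5 chosen purely for illustration.

```python
from typing import Callable, Dict, List

Application = Dict[str, float]


def guarded_decision(
    application: Application,
    score_fn: Callable[[Application], float],
    flags: Dict[str, bool],
    audit_log: List[dict],
    cutoff: float = 0.5,
) -> str:
    """Score with the model unless the kill switch is on; log flag state either way."""
    if flags.get("model_kill_switch", False):
        # Model disabled: route to documented manual review instead of auto-deciding.
        outcome = "manual_review"
    else:
        outcome = "approved" if score_fn(application) >= cutoff else "denied"
    audit_log.append({"outcome": outcome, "flags": dict(flags)})
    return outcome


def fixed_score(app: Application) -> float:
    return 0.7  # stand-in for a real model


log: List[dict] = []
print(guarded_decision({"income": 52000.0}, fixed_score, {"model_kill_switch": False}, log))
print(guarded_decision({"income": 52000.0}, fixed_score, {"model_kill_switch": True}, log))
```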

Pillar 4 — Monitor continuously and test regularly

Automate monitoring for disparate impact and subgroup performance. Define metric thresholds that trigger investigations and alert owners. Schedule back‑testing and validation windows. Integrate these dashboards into the same tools product and operations use. Use HMDA to benchmark public patterns when applicable. Consult industry fairness metrics for practical thresholds.

Small teams: start with one metric (selection rate) and expand.
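Selection-rate monitoring reduces to a ratio: each group's approval rate divided by a reference group's rate. A minimal sketch, assuming decisions arrive as `(group, approved)` pairs. The 0.8 alert threshold borrows the four-fifths rule of thumb from US employment-selection guidance; it is an assumption here, not a lending-law safe harbor, so set and document your own thresholds.

```python
from collections import Counter
from typing import Dict, Iterable, List, Tuple

Decision = Tuple[str, bool]  # (group label, approved?)


def selection_rates(decisions: Iterable[Decision]) -> Dict[str, float]:
    totals: Counter = Counter()
    approvals: Counter = Counter()
    for group, approved in decisions:
        totals[group] += 1
        approvals[group] += int(approved)
    return {group: approvals[group] / totals[group] for group in totals}


def adverse_impact_ratios(decisions: Iterable[Decision], reference: str) -> Dict[str, float]:
    rates = selection_rates(decisions)
    if rates.get(reference, 0.0) == 0.0:
        raise ValueError("Reference group has no approvals; ratio undefined.")
    return {group: rate / rates[reference] for group, rate in rates.items()}


def groups_to_investigate(
    decisions: Iterable[Decision], reference: str, threshold: float = 0.8
) -> List[str]:
    """Groups whose selection-rate ratio falls below the alert threshold."""
    ratios = adverse_impact_ratios(decisions, reference)
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)


# Illustrative data: 8/10 approvals for the reference group, 5/10 and 7/10 for others.
sample = (
    [("reference", True)] * 8 + [("reference", False)] * 2
    + [("group_1", True)] * 5 + [("group_1", False)] * 5
    + [("group_2", True)] * 7 + [("group_2", False)] * 3
)
print(groups_to_investigate(sample, reference="reference"))  # ['group_1']
```

Any group returned by `groups_to_investigate` would go to the named investigation owner for review.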

Pillar 5 — Standardize messaging and remediation

Pre‑write adverse action language and disclosure templates. Create remediation playbooks that include outreach scripts, timelines, and closure tracking. Retain proof of outreach and remediation outcomes as exam evidence. Use CFPB adverse action guidance for templates.

Rule of thumb: document the what, who, and when for every remediation.
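The what/who/when rule maps directly onto a tracking record. A minimal sketch, assuming a hypothetical `Remediation` record kept in your case tool; the status values and the example issue are illustrative.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List, Optional


@dataclass
class Remediation:
    what: str                    # harm or gap being remediated
    who: str                     # accountable owner
    opened: date                 # when the issue was logged
    due: date                    # committed closure date
    closed: Optional[date] = None
    outreach_evidence: List[str] = field(default_factory=list)  # e.g. letter batch IDs

    def status(self, today: date) -> str:
        if self.closed is not None:
            return "closed_on_time" if self.closed <= self.due else "closed_late"
        return "overdue" if today > self.due else "open"


item = Remediation(
    what="Refund excess fees for affected borrowers",
    who="ops-remediation-lead",
    opened=date(2024, 2, 1),
    due=date(2024, 4, 30),
)
item.outreach_evidence.append("letter-batch-001")
print(item.status(today=date(2024, 5, 15)))  # overdue until a closure date is recorded
```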

Step-by-Step Fair Lending Program Checklist

This is your operational playbook. Each action shows the suggested owner: product (P), compliance (C), engineering (E), legal (L), and data or data science (Data). Use it during launches and remediations.

Step 1 — Discovery and scoping (P/C)

- Map every path that affects terms, price, or access. Include pre‑qual, underwriting, manual review, and collections.

- Catalog affected states and flag state‑specific disclosure or fee rules (L/C).

- Interview product, credit, and ops leads to surface hidden decision logic and manual exceptions. One brief interview often reveals undocumented manual overrides.

- Collect application flows, sample notices, and customer journey snapshots. Store redacted examples with version tags in a central evidence folder.

Output: a scoping memo listing products, decision owners, jurisdiction notes, and high‑risk areas.

Micro‑example: during scoping you discover a manual underwriter override that bypasses an automated credit screen. Log that override, identify the owner, and require a justification field in the event log.
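The justification requirement can be enforced at write time rather than by policy alone. A minimal sketch, assuming a hypothetical `log_manual_override` helper that sits in front of your event log.

```python
from datetime import datetime, timezone
from typing import List


def log_manual_override(
    event_log: List[dict],
    application_id: str,
    automated_outcome: str,
    final_outcome: str,
    underwriter: str,
    justification: str,
) -> dict:
    """Record an underwriter override; refuse to record one without a justification."""
    if not justification.strip():
        raise ValueError("Override rejected: a written justification is required.")
    event = {
        "type": "manual_override",
        "application_id": application_id,
        "automated_outcome": automated_outcome,
        "final_outcome": final_outcome,
        "underwriter": underwriter,
        "justification": justification.strip(),
        "logged_at": datetime.now(timezone.utc).isoformat(),
    }
    event_log.append(event)
    return event


overrides: List[dict] = []
log_manual_override(overrides, "app-456", "denied", "approved",
                    "uw-17", "Verified income documents received after auto-screen")
```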

Quick checklist:

- Who owns each decision path?

- Which states matter?

- Are manual overrides documented?

Step 2 — Data and instrumentation tasks (E/Data)

- Implement event tracking for every decision‑relevant attribute: applicant attributes, outcomes, score inputs, timestamps, model version, experiment ID, and decision reason codes. Do this before any live rollouts.

- Create a required fields checklist. Decide how to handle protected‑class data, proxy flags, and opt‑outs. Validate how proxies will be stored and labeled.

- Instrument logging for experiments and toggles that alter offers. Ensure logs include the feature flag state at decision time.

- Apply retention limits and strict access controls for PII. Build redaction workflows for exam sample requests.
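Both the required-fields checklist and the redaction workflow can run as plain functions over logged events. A minimal sketch; the field lists are hypothetical examples, so replace them with the ones your scoping memo and data dictionary define.

```python
from typing import Dict, FrozenSet

# Hypothetical field lists; derive the real ones from your data dictionary.
REQUIRED_FIELDS: FrozenSet[str] = frozenset({
    "application_id", "outcome", "model_version", "experiment_id",
    "feature_flags", "reason_codes", "decided_at",
})
PII_FIELDS: FrozenSet[str] = frozenset({"name", "ssn", "email", "phone", "street_address"})


def missing_fields(event: Dict[str, object]) -> FrozenSet[str]:
    """Required keys absent from an event (a key present with value None counts as logged)."""
    return REQUIRED_FIELDS.difference(event)


def redact_for_exam(event: Dict[str, object]) -> Dict[str, object]:
    """Copy of the event with PII values masked for exam sample files."""
    return {key: ("[REDACTED]" if key in PII_FIELDS else value) for key, value in event.items()}


raw = {
    "application_id": "app-123", "outcome": "denied", "model_version": "credit-model-4.2",
    "experiment_id": None, "feature_flags": {"new_pricing_tier": False},
    "reason_codes": ["R01"], "name": "Jane Doe", "ssn": "000-00-0000",
}
print(sorted(missing_fields(raw)))   # ['decided_at']
print(redact_for_exam(raw)["ssn"])   # [REDACTED]
```

Running `missing_fields` in CI against a staging sample catches instrumentation gaps before any live rollout.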

Tooling shortcut: if your production data is thin, use public sandbox datasets to test flows and analytics.

One-sentence reminder: if it’s not logged, it didn’t happen.

Step 3 — Controls, testing, and analytics tasks (C/Data Science)

- Run pre‑launch fairness simulations on production‑like datasets. Compute disparate impact ratios and compare subgroup performance.

- Build a testing suite: selection‑rate ratios, subgroup ROC/AUC comparisons, false positive/negative analyses, and calibration checks. Use academic guidance, such as the Fairness Testing Primer, for test design.

- Document remediation thresholds and automated alerts. Define who investigates and the SLA for closure. Require a named compliance owner sign‑off before launch.

- Keep a clear audit trail of simulation inputs, code versions, and outputs.
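The false positive/negative analysis compares error rates across subgroups. A minimal sketch, assuming records of `(group, predicted_default, actual_default)` where "positive" means predicted default; under that convention a false positive is a creditworthy applicant the model would decline. Group labels and data are illustrative.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

Record = Tuple[str, bool, bool]  # (group, predicted_default, actual_default)


def error_rates_by_group(records: Iterable[Record]) -> Dict[str, Dict[str, float]]:
    """Per-group false positive rate (FPR) and false negative rate (FNR)."""
    counts: Dict[str, Dict[str, int]] = defaultdict(
        lambda: {"tp": 0, "fp": 0, "tn": 0, "fn": 0}
    )
    for group, predicted, actual in records:
        cell = ("tp" if predicted else "fn") if actual else ("fp" if predicted else "tn")
        counts[group][cell] += 1
    rates = {}
    for group, c in counts.items():
        negatives = c["fp"] + c["tn"]   # applicants who did not default
        positives = c["tp"] + c["fn"]   # applicants who did default
        rates[group] = {
            "fpr": c["fp"] / negatives if negatives else float("nan"),
            "fnr": c["fn"] / positives if positives else float("nan"),
        }
    return rates


records = [
    ("group_a", True, False), ("group_a", False, False),
    ("group_a", True, True), ("group_a", False, True),
    ("group_b", False, False), ("group_b", False, False), ("group_b", True, True),
]
for group, r in sorted(error_rates_by_group(records).items()):
    print(group, r)
```

A large FPR gap between groups means creditworthy applicants in one group are declined more often; archive the gap size that triggers investigation with the simulation inputs.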

Mini dialogue for handoff clarity:

Product: "Tests passed in staging — ready to launch?"

Compliance: "Not yet. We need the production logs and sign‑off on thresholds."

Product: "Understood — we’ll delay the launch flag until you sign."

What to test now:

- Staging simulations with production‑like joins.

- Sensitivity to missing inputs.

- Experiment impact on protected subgroups.
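Sensitivity to missing inputs can be tested by replacing one input at a time with the value your pipeline substitutes when that field is missing, then checking whether the decision flips. A minimal sketch; `toy_decision` and its cutoffs are hypothetical stand-ins for your real decision function and imputation defaults.

```python
from typing import Callable, Dict, List, Tuple

Application = Dict[str, float]


def missing_input_sensitivity(
    application: Application,
    decide: Callable[[Application], str],
    imputed_defaults: Dict[str, float],
) -> Tuple[str, List[str]]:
    """Return the baseline decision and the inputs whose absence would flip it."""
    baseline = decide(application)
    flips: List[str] = []
    for name in application:
        if name not in imputed_defaults:
            continue  # no documented fallback for this field; review separately
        perturbed = {**application, name: imputed_defaults[name]}
        if decide(perturbed) != baseline:
            flips.append(name)
    return baseline, flips


def toy_decision(app: Application) -> str:
    # Hypothetical rule for illustration only.
    return "approved" if app["income"] >= 40_000 and app["dti"] <= 0.40 else "denied"


applicant = {"income": 52_000.0, "dti": 0.31, "tenure_months": 24.0}
defaults = {"income": 0.0, "dti": 1.0, "tenure_months": 0.0}
print(missing_input_sensitivity(applicant, toy_decision, defaults))
```

Running this across a staging sample and comparing flip rates by subgroup shows whether missing data hits some applicants harder than others.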

Step 4 — Policies, training, and operationalization (C/P)

- Publish role‑specific SOPs and embed fair‑lending checkpoints in PRD templates and release gates. Make the compliance checkpoint non‑optional.

- Train product and engineering on what compliance artifacts they must produce. Provide short checklists for code merges that affect decisions.

- Run quarterly tabletop exercises and update playbooks after incidents. Use peer communities for quick troubleshooting.
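A release gate can encode the sign-off rule so a missing approval blocks the release instead of relying on memory. A minimal sketch, assuming your CI can read a hypothetical `ReleaseCandidate` record; wire `release_blockers` into whatever gate your pipeline already runs.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ReleaseCandidate:
    name: str
    touches_terms: bool                  # changes pricing, access, or credit terms
    fairness_simulation_done: bool       # pre-deployment simulation archived
    compliance_signoff_by: Optional[str] = None  # named compliance owner


def release_blockers(rc: ReleaseCandidate) -> List[str]:
    """Reasons this release must not ship; an empty list means the gate passes."""
    if not rc.touches_terms:
        return []  # low-risk change: proceeds with heightened monitoring instead
    blockers = []
    if not rc.fairness_simulation_done:
        blockers.append("pre-deployment fairness simulation not complete")
    if not rc.compliance_signoff_by:
        blockers.append("no named compliance sign-off")
    return blockers


rc = ReleaseCandidate("pricing-tweak", touches_terms=True, fairness_simulation_done=True)
print(release_blockers(rc))  # ['no named compliance sign-off']
```

In CI, a non-empty list would fail the job, for example by exiting with a non-zero status.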

Output: sprint rituals with explicit compliance tasks and a training completion tracker.

Practical step: add a single compliance bullet in every PRD. Make it visible in sprint planning.

Audit Readiness and Exam Playbook

Adopt an evidence‑first mindset. Examiners expect reproducible artifacts mapped to owners.

Pre‑exam inventory and evidence collection

Create a single evidence index that maps document types to owners and storage locations. Use an evidence‑index template to bootstrap this work.

Collect:

- Data dictionaries and lineage documentation (E/C).

- Model validation reports, sensitivity analyses, and back‑test results (C/E).

- Adverse action examples and templates (L/C); see the CFPB adverse action templates.

- Monitoring dashboards and alert logs for the exam window (C/E).

- Snapshots of code, feature‑flag states, and model versions for the period in question (E).

Prepare redacted sample files and maintain a chain‑of‑custody log for any shared datasets.
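A chain-of-custody log is more useful when each entry includes a content hash, so anyone can later confirm that the file shared is the file logged. A minimal sketch using Python's standard `hashlib`; the log format and the `record_transfer` helper are hypothetical.

```python
import hashlib
import tempfile
from datetime import datetime, timezone
from pathlib import Path
from typing import List


def sha256_of(path: Path) -> str:
    """SHA-256 of a file, read in chunks so large extracts don't exhaust memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def record_transfer(custody_log: List[dict], path: Path, sender: str, recipient: str) -> dict:
    """Append a custody entry tying the file's current hash to who sent it to whom."""
    entry = {
        "file": path.name,
        "sha256": sha256_of(path),
        "sender": sender,
        "recipient": recipient,
        "transferred_at": datetime.now(timezone.utc).isoformat(),
    }
    custody_log.append(entry)
    return entry


with tempfile.TemporaryDirectory() as tmp:
    sample = Path(tmp) / "redacted_sample.csv"
    sample.write_text("application_id,outcome\napp-123,denied\n")
    log: List[dict] = []
    record_transfer(log, sample, sender="compliance-lead", recipient="examiner-team")
    print(log[0]["sha256"] == sha256_of(sample))  # True while the file is unchanged
```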

Checklist for the night before an exam request:

- Evidence index up to date.

- Named owners for each artifact.

- Redacted samples ready.
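The night-before checks can be run against the evidence index itself. A minimal sketch, assuming the index is kept as a list of records; artifact names mirror the collection list in this section, while the storage paths are hypothetical.

```python
from typing import Dict, List

EvidenceEntry = Dict[str, str]

evidence_index: List[EvidenceEntry] = [
    {"artifact": "data dictionaries and lineage", "owner": "E/C", "location": "evidence/lineage/"},
    {"artifact": "model validation reports", "owner": "C/E", "location": "evidence/models/"},
    {"artifact": "adverse action examples", "owner": "L/C", "location": ""},
    {"artifact": "monitoring dashboards and alert logs", "owner": "", "location": "evidence/monitoring/"},
]


def index_gaps(index: List[EvidenceEntry]) -> List[str]:
    """Artifacts missing a named owner or a storage location."""
    gaps = []
    for entry in index:
        missing = [key for key in ("owner", "location") if not entry.get(key)]
        if missing:
            gaps.append(f"{entry['artifact']}: missing {', '.join(missing)}")
    return gaps


for gap in index_gaps(evidence_index):
    print(gap)
```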

Simulated exam and remediation sprints

Run a timed regulator request scenario to test speed and evidence completeness. Use a simulated request playbook to structure the drill.

Simulated exam steps:

- Assign a point person for each evidence type and set 24/48/72‑hour deliverables.

- Triage missing artifacts; create focused remediation sprints for high‑risk items.

- Use a "report to regulator" template that summarizes findings, root causes, and remediation timelines.

- Rehearse a 2‑minute executive narrative explaining controls, monitoring, and risk appetite.

Hypothetical timeline: on day one, pull monitoring logs and model snapshots; on day two, deliver redacted sample files and the remediation plan; on day three, present the executive narrative and closure timelines.
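The 24/48/72-hour deliverables are easy to track mechanically during the drill. A minimal sketch, assuming the hypothetical mapping below, which follows the three-day timeline just described; adjust items and hours to your own drill.

```python
from datetime import datetime, timedelta, timezone
from typing import Dict, Iterable, List

# Hypothetical deliverable deadlines, in hours after the request arrives.
DELIVERABLE_HOURS: Dict[str, int] = {
    "monitoring logs": 24,
    "model snapshots": 24,
    "redacted sample files": 48,
    "remediation plan": 48,
    "executive narrative": 72,
}


def due_times(received: datetime) -> Dict[str, datetime]:
    return {item: received + timedelta(hours=h) for item, h in DELIVERABLE_HOURS.items()}


def overdue(received: datetime, delivered: Iterable[str], now: datetime) -> List[str]:
    """Deliverables past their deadline that have not been delivered yet."""
    done = set(delivered)
    return sorted(
        item for item, due in due_times(received).items()
        if item not in done and now > due
    )


received = datetime(2024, 6, 3, 9, 0, tzinfo=timezone.utc)  # illustrative request time
print(overdue(received, delivered={"monitoring logs"}, now=received + timedelta(hours=30)))
# ['model snapshots']
```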

Small teams: run the drill quarterly. It exposes fragile evidence paths fast.

Benchmarking and continuous improvement

Score your program maturity across the five pillars and publish a remediation plan with owners and target dates. Benchmark patterns against HMDA and agency observations to identify potential examiner triggers.

Request a short diagnostic to map multi‑state requirements and get a time and cost estimate for remediation. A diagnostic delivers prioritized tasks, ballpark timelines, and predictable pricing that senior leadership can budget against, which is useful near quarter‑end launches.

Track KPIs: time‑to‑investigate, remediation closure rate, percent of experiments logged, and monitoring coverage. Publish these to leadership so resourcing becomes visible and actionable.

One-line KPI to start: time‑to‑produce evidence for a regulator request.
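These KPIs are simple ratios once the underlying events are logged. A minimal sketch with hypothetical inputs; definitions such as "closure rate = closed / opened in the period" are assumptions to agree with leadership before publishing.

```python
from statistics import median
from typing import Dict, List, Optional, Set


def program_kpis(
    evidence_hours: List[float],        # time to produce evidence per regulator request
    investigation_hours: List[float],   # time from alert to completed investigation
    remediations_opened: int,
    remediations_closed: int,
    experiments_run: int,
    experiments_logged: int,
    decision_paths: Set[str],
    monitored_paths: Set[str],
) -> Dict[str, Optional[float]]:
    def ratio(numerator: float, denominator: float) -> Optional[float]:
        return numerator / denominator if denominator else None

    return {
        "median_hours_to_produce_evidence": median(evidence_hours) if evidence_hours else None,
        "median_hours_to_investigate": median(investigation_hours) if investigation_hours else None,
        "remediation_closure_rate": ratio(remediations_closed, remediations_opened),
        "pct_experiments_logged": ratio(100 * experiments_logged, experiments_run),
        "pct_paths_monitored": ratio(100 * len(monitored_paths & decision_paths), len(decision_paths)),
    }


kpis = program_kpis(
    evidence_hours=[30, 52, 41],
    investigation_hours=[4, 10, 6],
    remediations_opened=4,
    remediations_closed=3,
    experiments_run=10,
    experiments_logged=9,
    decision_paths={"pre-qual", "underwriting", "manual review", "collections"},
    monitored_paths={"pre-qual", "underwriting"},
)
print(kpis)
```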

Common Mistakes and How to Fix Them

Mistake 1 — Relying on ad hoc legal advice.

Fix: centralize ownership, require documented rationale for exceptions, and log decisions in the evidence index.

Mistake 2 — Shipping models without fairness tests.

Fix: block release until pre‑deployment simulations complete and an owner signs off.

Mistake 3 — Missing data lineage.

Fix: formalize lineage, version transforms, and require join logic documentation for analytics.

Mistake 4 — Treating fair lending as a one‑off.

Fix: integrate monitoring into sprint rituals and require quarterly validations.

Mistake 5 — Poor exam documentation.

Fix: maintain an evidence index, run simulated requests, and schedule periodic spot checks.

Mistake 6 — Understaffed compliance function.

Fix: use fractional CCO support during peaks and audits to supply senior judgment and predictable costs.

Quick example for Mistake 2: a team launched a pricing tweak without subgroup tests. Post‑launch monitoring showed a 20% selection‑rate drop for a protected subgroup. They reverted the change and added a forced staging period. That process is what you want baked into your release gates.

Two-sentence warning: Don’t assume monitoring will catch every change. Put blockers and sign‑offs in place.

Conclusion — Key Takeaways and Next Steps

Treat fair lending as repeatable work across data, policy, models, monitoring, and messaging. Evidence and reproducibility must be built into releases.

Do these three things this quarter:

- Run a one‑week discovery sprint.

- Instrument core decision events.

- Schedule a simulated exam.

If you need rapid senior coverage, run a short diagnostic or bring in fractional CCO help to bridge skill gaps and reduce launch risk. Start the sprint now. Save the weeks you’d otherwise lose to remediation.

FAQs

Q: What documentation do regulators expect?

A: Data dictionaries, data lineage, model validation reports, adverse action templates, experiment logs, monitoring dashboards, system snapshots, and a maintained evidence index.

Q: How often should fairness testing run?

A: Use real‑time monitoring for core metrics and run full validations quarterly. Always run pre‑deployment simulations for changes that touch pricing or access.

Q: Can small fintechs use proxies for protected classes?

A: Proxies are usable for monitoring but carry legal and statistical limits. Validate performance, document limitations, and follow CFPB guidance and code examples when you implement proxies.

Q: How do I balance product velocity with compliance?

A: Use staged rollouts, feature flags, and accelerated post‑launch monitoring. Require compliance sign‑off for experiments that affect terms, and allow low‑risk experiments to proceed with heightened monitoring.

Q: What are reasonable remediation timelines?

A: Prioritize by risk. For critical harms, apply immediate mitigations and complete remediation within 30–90 days. Resolve documentation gaps within a quarter, with assigned owners. Track SLA adherence in your remediation plan.

Q: When to hire a full‑time CCO versus fractional help?

A: Hire full‑time when your regulatory footprint and product complexity require continuous, dedicated leadership. Use fractional CCOs during early growth for senior judgment, predictable costs, and fast integration. For a quick benchmark and time/cost estimate, consider a short diagnostic.