AI Governance in Human Resources: A 30–90 Day Guide

AI Governance in Human Resources: A tactical 30/60/90 guide to inventory, risk assessment, policy, controls, and audit readiness so HR teams can reduce legal and operational exposure.

Introduction — Why HR Needs AI Governance

HR AI can cost you.

Left ungoverned, AI in Human Resources is a core business risk.

HR teams now use AI for recruiting, performance scoring, pay decisions, and candidate chatbots. These systems touch sensitive PII and expose you to legal, reputational, and operational harm.

This guide gives a compact, practical model HR leaders can apply in 30–90 day sprints. You’ll get a five-part approach, step-by-step actions, and a compliance-mapped role checklist to make governance real for your team.

What you will do this quarter: run a 30‑day inventory, complete a 60‑day risk review, and deliver 90‑day controls and monitoring for two prioritized systems.

Five-part AI Governance Model for HR

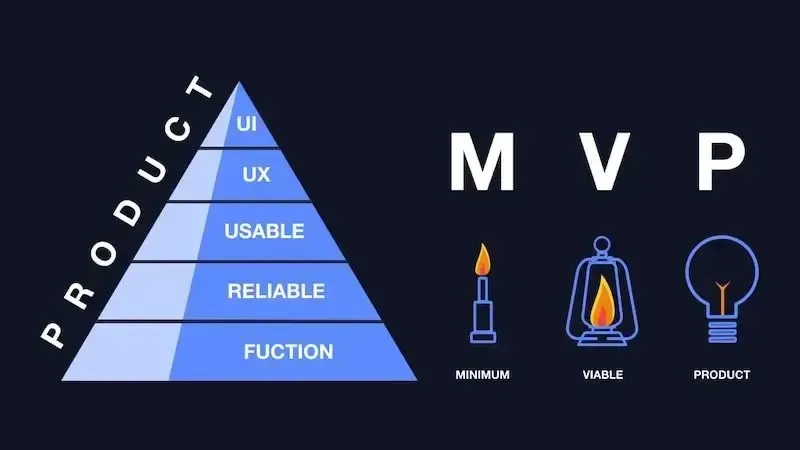

Use this five-part sequence: Scope → Risk Assessment → Policy & Accountability → Controls & Monitoring → Audit & Response. It focuses on HR realities: sensitive personal data, many vendors, and high fairness scrutiny.

This approach builds on federal and international guidance, such as the NIST AI Risk Management Framework, but is narrower and more tactical for HR teams. For practical tools and playbooks, visit the NIST AI Resource Center (AIRC).

30/60/90 micro-checklist:

- 30 days: Catalog systems and owners.

- 60 days: Score and prioritize risks.

- 90 days: Deploy controls and monitoring dashboards.

Quick note: start with the system that could cost you a hire, a lawsuit, or regulator attention.

Step 1: Scope HR AI Inventory

Catalog every HR AI use: resume parsers, interview screeners, compensation models, churn predictors, internal chat assistants, and automated termination recommendations. Tag each system by data type (PII, health, salary) and decision impact (informational, decision‑support, automated decision).

Map each system to an owner: HR product owner, vendor lead, or engineering contact. Record vendor SLAs and update cadence. Capture model provenance: training data sources, model type, evaluation datasets, and known limitations.

Use a simple Google Sheet or shared template. The UK ICO's AI and data protection risk toolkit has reusable assessment items you can adapt.

Prioritize systems by an impact score: PII sensitivity + decision criticality + regulatory exposure. Score each factor 0–5 (maximum 15) and work from the highest total down. Examples:

- Resume screener: PII 3 + decision criticality 4 + regulatory 4 = 11 (high)

- Internal chatbot: PII 2 + decision criticality 1 + regulatory 1 = 4 (low)
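The inventory and scoring rule can live in a spreadsheet, but a minimal sketch in code makes the fields and arithmetic explicit. The class and field names below are hypothetical, and the tier cut-offs (10+ high, 6–9 medium) are assumptions: the guide labels 11 "high" and 4 "low" without fixing thresholds.

```python
from dataclasses import dataclass

# Hypothetical tier cut-offs on the 0-15 impact scale. The guide labels 11 "high"
# and 4 "low" but does not fix thresholds, so tune these to your risk appetite.
HIGH_MIN = 10
MEDIUM_MIN = 6


@dataclass
class HrAiSystem:
    """One inventory row; field names are illustrative, not a standard schema."""
    name: str
    owner: str
    data_types: list[str]       # e.g. ["PII", "salary"]
    decision_impact: str        # "informational", "decision-support" or "automated decision"
    pii_sensitivity: int        # 0-5
    decision_criticality: int   # 0-5
    regulatory_exposure: int    # 0-5

    def impact_score(self) -> int:
        """Additive impact score (maximum 15)."""
        factors = (self.pii_sensitivity, self.decision_criticality, self.regulatory_exposure)
        if any(not 0 <= f <= 5 for f in factors):
            raise ValueError(f"{self.name}: each factor must be scored 0-5")
        return sum(factors)

    def tier(self) -> str:
        score = self.impact_score()
        if score >= HIGH_MIN:
            return "high"
        if score >= MEDIUM_MIN:
            return "medium"
        return "low"


inventory = [
    HrAiSystem("Resume screener", "HR product owner", ["PII"], "decision-support", 3, 4, 4),
    HrAiSystem("Internal chatbot", "Engineering contact", ["PII"], "informational", 2, 1, 1),
]

# Work the inventory from the highest impact score down.
for system in sorted(inventory, key=lambda s: s.impact_score(), reverse=True):
    print(f"{system.name}: {system.impact_score()} ({system.tier()})")
```

The owners and decision-impact labels in the two rows are placeholders. Only the factor scores come from the examples above.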

Mini-vignette: David, COO at a payments startup, found that the resume screener was giving women lower interview scores. The team had not tracked data provenance. That one discovery moved the screener to the top of the 30‑day inventory.

Quick reader prompt: Which system could cost you a hire, a lawsuit, or regulator attention? Start there.

Step 2: Risk Assessment for HR ML Systems

Identify HR-specific risks

HR risks include bias/discrimination, privacy breaches, wrongful termination, inaccurate performance scoring, and vendor concentration. Map each to exposures under EEO/ADA, state privacy laws, and employment law.

For regulatory context, review EEOC guidance and recent enforcement actions; they help frame the urgency.

"Employers must ensure automated systems do not unlawfully discriminate and must validate systems used in hiring decisions." — EEOC guidance (paraphrased)

Run a lightweight risk rating

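Use a four-factor rubric: Likelihood × Impact × Detectability × Regulatory sensitivity. Score each factor 1–5 so that a higher number always means more risk (for detectability, 5 = hardest to detect), multiply, and rank. Products range from 1 to 625.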

Example scorecard for an automated resume screener:

- Likelihood of bias: 4

- Impact on hires: 5

- Detectability: 2

- Regulatory sensitivity: 5

Total risk score: 4×5×2×5 = 200 (high)
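A minimal sketch of the four-factor rating, assuming every factor is scored so that 5 is worst. The second system and its scores are hypothetical and exist only to show the ranking step.

```python
from math import prod

FACTORS = ("likelihood", "impact", "detectability", "regulatory_sensitivity")


def risk_score(scores: dict[str, int]) -> int:
    """Multiply the four 1-5 factor scores (range 1-625).

    Assumes 5 is always worst; for detectability, 5 = hardest to detect.
    """
    missing = [f for f in FACTORS if f not in scores]
    if missing:
        raise KeyError(f"missing factors: {missing}")
    for f in FACTORS:
        if not 1 <= scores[f] <= 5:
            raise ValueError(f"{f} must be scored 1-5, got {scores[f]}")
    return prod(scores[f] for f in FACTORS)


resume_screener = {"likelihood": 4, "impact": 5, "detectability": 2, "regulatory_sensitivity": 5}
# Hypothetical second system, included only to demonstrate ranking.
internal_chatbot = {"likelihood": 2, "impact": 1, "detectability": 3, "regulatory_sensitivity": 1}

ranked = sorted(
    {"Resume screener": resume_screener, "Internal chatbot": internal_chatbot}.items(),
    key=lambda item: risk_score(item[1]),
    reverse=True,
)
for name, scores in ranked:
    print(name, risk_score(scores))
```

Because the factors multiply, one low score (such as a detectability of 2) pulls the total down sharply. That is a reason to record the assumptions behind each score during the stakeholder session.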

Run scoring with HR, Legal, Engineering, and Data Science. Keep sessions short (60–90 minutes) and use real examples. If stakeholders disagree, surface assumptions and re-score with supporting data.

Translate risk to controls

Map each high-risk finding to specific controls: data minimization, fairness testing, human-in‑the‑loop checkpoints, logging, and appeal paths. Create a control register that links risk → control → owner → status.

Use fairness toolkits like Fairlearn for tests. For dataset provenance, use Google's Data Cards Playbook.

Practical tip: for each control add a simple success metric (e.g., disparity ratio < 1.2, override rate < 5%) so monitoring has clear thresholds.

Short, concrete example: if a screener shows a 1.4 disparity ratio for interview invites, add a mitigation control (adjust model features or require human review) and track the override rate weekly.
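The control register and its success metrics can be sketched as below. Two points are assumptions. First, "disparity ratio" is taken to mean the highest group selection rate divided by the lowest, so 1.0 is parity. Fairlearn's `demographic_parity_ratio` reports the reciprocal (lowest/highest), so a 1.2 limit here corresponds to roughly 0.83 there. Second, the applicant counts and override figures are invented to reproduce the 1.4 example.

```python
from dataclasses import dataclass


@dataclass
class Control:
    """One control-register row: risk -> control -> owner -> status, plus its success metric."""
    risk: str
    control: str
    owner: str
    status: str
    metric: str
    limit: float  # the control passes while the observed metric stays below this value


def disparity_ratio(invited: dict[str, int], applicants: dict[str, int]) -> float:
    """Highest group selection rate divided by the lowest; 1.0 means parity."""
    rates = [invited[g] / applicants[g] for g in applicants]
    lowest = min(rates)
    return float("inf") if lowest == 0 else max(rates) / lowest


register = [
    Control("Biased interview invites", "Fairness testing + human review", "Model Reviewer",
            "open", "disparity_ratio", 1.2),
    Control("Reviewers disagree with model output", "Human-in-the-loop checkpoint", "AI Owner",
            "open", "override_rate", 0.05),
]

# Hypothetical week: 70 of 200 group-A applicants invited (35%) versus
# 25 of 100 group-B applicants (25%), which reproduces the 1.4 ratio above.
observed = {
    "disparity_ratio": disparity_ratio({"A": 70, "B": 25}, {"A": 200, "B": 100}),
    "override_rate": 3 / 120,  # 3 overrides in 120 reviewed decisions (2.5%)
}

breaches = [c for c in register if observed[c.metric] >= c.limit]
for c in breaches:
    print(f"{c.metric}={observed[c.metric]:.2f} breaches limit {c.limit}: "
          f"{c.owner} owns '{c.control}'")
```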

Step 3: Policies, Roles, and Accountability

Draft core governance policies

Write short, actionable policies: allowed AI uses in HR, data retention windows, vendor vetting, and human oversight rules. Include mandatory pre-deployment checklist items:

- Bias test passed and documented.

- Privacy review completed.

- Decision labeled: support vs. automated.

- Model card published.

Ground these policies in guidance such as the OECD AI Principles, and use Google’s Model Card Toolkit for documentation.

Assign compliance roles and escalation paths

Define responsibilities: AI Owner (HR), Data Steward, Model Reviewer (Data Science), Compliance Lead. Add these to your RACI and make compliance sign‑off a sprint pre-release gate.
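As a minimal sketch, the pre-deployment checklist and compliance sign-off can be expressed as a release gate that returns its blockers. The item keys are hypothetical. `vendor_attestations_received` is not in the checklist above; it is added to mirror the dialogue below, so adapt the list to your own policy.

```python
from typing import Optional

# Checklist items plus compliance sign-off; key names are hypothetical.
REQUIRED_ITEMS = (
    "bias_test_documented",
    "privacy_review_completed",
    "model_card_published",
    "vendor_attestations_received",  # assumption: mirrors the dialogue below
    "compliance_signoff",
)
ALLOWED_DECISION_LABELS = {"support", "automated"}


def release_gate(checklist: dict[str, bool], decision_label: Optional[str]) -> list[str]:
    """Return the blocking items; an empty list means the release may proceed."""
    blockers = [item for item in REQUIRED_ITEMS if not checklist.get(item, False)]
    if decision_label not in ALLOWED_DECISION_LABELS:
        blockers.append("decision_label")
    return blockers


# The screener from the dialogue: fairness report and vendor attestations outstanding.
screener = {
    "bias_test_documented": False,
    "privacy_review_completed": True,
    "model_card_published": True,
    "vendor_attestations_received": False,
    "compliance_signoff": False,
}
blockers = release_gate(screener, "support")
print("BLOCKED" if blockers else "CLEARED", blockers)
```

Wiring a check like this into CI or the sprint board makes the gate visible, rather than leaving it dependent on someone remembering to ask.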

Mini-dialogue example:

HR lead: "Can we launch the new screener this week?"

Compliance (fractional CCO): "Not yet. We need the fairness report and vendor attestations. Pause the deploy until those are in."

Use fractional compliance to own accountability

If you lack senior compliance bandwidth, bring in a fractional CCO to own policy, run regulator‑ready risk assessments, and manage audit readiness. Comply IQ’s Fractional CCO Services can fill that role on demand and integrate with HR and product teams.

Next practical step: schedule a 30‑minute compliance intake call or download a one‑page checklist to scope a fractional CCO engagement.

Note from experience: when a fractional compliance lead owned sign‑off, engineering regained three days per release previously lost to ad hoc legal reviews.

Step 4: Controls, Validation, and Monitoring

Pre-deployment validation

Require fairness, performance, and privacy tests pre-deployment. Concrete tests: demographic parity, disparate impact ratios, AUC, precision/recall, and threshold-based false positive/negative checks. Document test datasets, thresholds, and remediation actions.
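Toolkits such as AIF360 and Fairlearn compute these metrics for you. The dependency-free sketch below shows the arithmetic behind the per-group checks, assuming binary labels where 1 means qualified (`y_true`) or advanced to interview (`y_pred`). It reports disparate impact as lowest/highest selection rate, the convention behind the four-fifths rule. AUC needs model scores rather than hard decisions, and scikit-learn's `roc_auc_score` is one option for that. The toy data is invented.

```python
from collections import defaultdict


def _rate(num: int, den: int) -> float:
    """Safe division: NaN when a group has no cases in the denominator."""
    return num / den if den else float("nan")


def group_metrics(y_true, y_pred, groups) -> dict:
    """Per-group selection rate, precision, recall, FPR and FNR for binary labels (1 = positive)."""
    counts = defaultdict(lambda: {"tp": 0, "fp": 0, "tn": 0, "fn": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        if pred:
            counts[group]["tp" if truth else "fp"] += 1
        else:
            counts[group]["fn" if truth else "tn"] += 1
    report = {}
    for group, c in counts.items():
        report[group] = {
            "selection_rate": _rate(c["tp"] + c["fp"], sum(c.values())),
            "precision": _rate(c["tp"], c["tp"] + c["fp"]),
            "recall": _rate(c["tp"], c["tp"] + c["fn"]),
            "fpr": _rate(c["fp"], c["fp"] + c["tn"]),
            "fnr": _rate(c["fn"], c["fn"] + c["tp"]),
        }
    return report


def disparate_impact_ratio(report: dict) -> float:
    """Lowest group selection rate divided by the highest (four-fifths rule convention)."""
    rates = [m["selection_rate"] for m in report.values()]
    highest = max(rates)
    return float("nan") if highest == 0 else min(rates) / highest


# Toy validation set, invented for illustration.
y_true = [1, 1, 0, 0, 1, 0, 1, 0]
y_pred = [1, 0, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

report = group_metrics(y_true, y_pred, groups)
for group, metrics in sorted(report.items()):
    print(group, {k: round(v, 2) for k, v in metrics.items()})
print("disparate impact ratio:", disparate_impact_ratio(report))
```

Record the dataset, the thresholds you test against, and the result alongside these numbers so the evidence can go straight into the model card.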

Testing toolkits:

- IBM AIF360 for bias detection

- Fairlearn for mitigation

Produce model cards and datasheets that explain intended use, limitations, and evaluation results by group. Hugging Face’s model card templates are one starting point.

Continuous monitoring in production

Monitor:

- Input distribution drift.

- Outcome drift.

- Error rates.

- Override and appeal rates.

Build dashboards and alerts with a simple stack: Prometheus for metrics + Grafana for dashboards. Set alert thresholds tied to your control register and review monthly for high‑risk systems, quarterly for medium.
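The review cadence can be scheduled with a small helper. This is a sketch under stated assumptions: "monthly" and "quarterly" are approximated as 30 and 90 days, and the "low" tier interval is a hypothetical addition. A material data or feature change pulls the review forward, matching the re-testing advice in the FAQ.

```python
from datetime import date, timedelta
from typing import Optional

# Monthly ~ 30 days and quarterly ~ 90 days; the "low" interval is hypothetical.
REVIEW_INTERVAL_DAYS = {"high": 30, "medium": 90, "low": 365}


def next_review(tier: str, last_review: date, material_change: Optional[date] = None) -> date:
    """Next scheduled review; a material data or feature change pulls it forward."""
    due = last_review + timedelta(days=REVIEW_INTERVAL_DAYS[tier])
    if material_change is not None and material_change < due:
        return material_change
    return due


print(next_review("high", date(2024, 1, 15)))
print(next_review("medium", date(2024, 1, 15), material_change=date(2024, 2, 1)))
```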

Mini-dashboard example (KPIs):

- Daily input drift metric > 0.05 → alert.

- Monthly demographic parity ratio > 1.2 → investigate.

- Override rate > 5% → root‑cause review.
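In a Prometheus + Grafana stack, these thresholds become alert rules on exported metrics. The Python sketch below shows only the threshold logic. The guide does not name a drift metric, so this sketch assumes total variation distance between binned input distributions (0 = identical, 1 = no overlap). It treats the parity ratio as highest/lowest selection rate and expresses rates as fractions. The "years of experience" feature and the values are hypothetical.

```python
from collections import Counter


def total_variation(baseline: list[str], current: list[str]) -> float:
    """Total variation distance between two binned samples: 0 = identical, 1 = no overlap."""
    p, q = Counter(baseline), Counter(current)
    return 0.5 * sum(abs(p[k] / len(baseline) - q[k] / len(current)) for k in set(p) | set(q))


# (metric, threshold, action) copied from the mini-dashboard KPIs; rates as fractions.
KPI_RULES = [
    ("input_drift", 0.05, "alert"),
    ("demographic_parity_ratio", 1.2, "investigate"),
    ("override_rate", 0.05, "root-cause review"),
]


def evaluate_kpis(values: dict[str, float]) -> list[str]:
    """Return one action line for every KPI that exceeds its threshold."""
    return [
        f"{name}={values[name]:.3f} > {limit}: {action}"
        for name, limit, action in KPI_RULES
        if values[name] > limit
    ]


# Hypothetical binned feature (years of experience) at training time vs. today.
baseline = ["0-2y"] * 50 + ["3-5y"] * 30 + ["6y+"] * 20
today = ["0-2y"] * 40 + ["3-5y"] * 30 + ["6y+"] * 30

alerts = evaluate_kpis({
    "input_drift": total_variation(baseline, today),
    "demographic_parity_ratio": 1.1,
    "override_rate": 0.07,
})
for line in alerts:
    print(line)
```

Whichever drift metric you choose, document it in the control register. A 0.05 threshold means different things for total variation, PSI, or a KS statistic.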

Response flow: detect, triage, remediate, then document.

Vendor and supply-chain controls

Require vendor attestations: data lineage, update cadence, third‑party audit results (SOC 2), and right-to-audit clauses. Reference SOC 2 basics when drafting requests. Add contractual SLAs for model performance and change notification. Use CIS Controls for vendor risk baselines.

Practical contract language snippet to consider (paraphrase): vendors must provide update notices 30 days before model changes affecting outcomes and supply model performance data on request.
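Vendor evidence can be tracked against the attestations listed above and the 30-day notice clause. The sketch below is minimal. The attestation keys are hypothetical labels for the items named in this section, and the dates are invented.

```python
from datetime import date

# Hypothetical keys for the attestations listed above.
REQUIRED_ATTESTATIONS = ("data_lineage", "update_cadence", "soc2_report", "right_to_audit_clause")
MIN_NOTICE_DAYS = 30  # from the contract language above


def vendor_findings(attestations: set[str], notice_date: date, change_date: date) -> list[str]:
    """List missing attestations and any model-change notice shorter than the contract minimum."""
    findings = [f"missing attestation: {a}" for a in REQUIRED_ATTESTATIONS if a not in attestations]
    notice_days = (change_date - notice_date).days
    if notice_days < MIN_NOTICE_DAYS:
        findings.append(f"change notice {notice_days} days ahead (contract requires {MIN_NOTICE_DAYS})")
    return findings


findings = vendor_findings({"data_lineage", "soc2_report"}, date(2024, 3, 1), date(2024, 3, 15))
for item in findings:
    print(item)
```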

Step 5: Audit Readiness and Incident Response

Build regulator-ready documentation

Assemble a per-system AI compliance dossier: risk assessment, validation results, monitoring logs, decision rationale, vendor artifacts, and model cards. Use NTIA guidance for disclosure items and system cards. The Australian AIA tool offers a practical template to adapt.

Store artifacts in an encrypted, indexed repository with access logs. Make the dossier exportable for regulator requests.
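An indexed, exportable dossier can start as a manifest that lists each artifact with a SHA-256 hash. The hash shows an exported file matches what was stored. The sketch assumes one file per artifact type, with hypothetical key names taken from the dossier contents above. Encryption and access logging belong to the repository itself and are not shown.

```python
import hashlib
import json
import tempfile
from pathlib import Path

# Artifact types from the dossier description; key names are hypothetical.
REQUIRED_ARTIFACTS = (
    "risk_assessment", "validation_results", "monitoring_logs",
    "decision_rationale", "vendor_artifacts", "model_card",
)


def build_manifest(system: str, artifacts: dict[str, Path]) -> dict:
    """Index artifacts with SHA-256 hashes and flag any missing dossier items."""
    missing = [a for a in REQUIRED_ARTIFACTS if a not in artifacts]
    entries = {
        name: {"path": str(path), "sha256": hashlib.sha256(path.read_bytes()).hexdigest()}
        for name, path in sorted(artifacts.items())
    }
    return {"system": system, "complete": not missing, "missing": missing, "artifacts": entries}


# Demo with a throwaway file; a real dossier would point at the repository.
with tempfile.TemporaryDirectory() as tmp:
    card = Path(tmp) / "model_card.md"
    card.write_text("Intended use: decision support for resume screening.\n")
    manifest = build_manifest("Resume screener", {"model_card": card})
    print(json.dumps(manifest, indent=2))
```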

Prepare incident and remediation playbooks

Write an incident playbook: detection, triage owner, regulator-notification thresholds, and communication templates for employees and candidates. Run at least one tabletop exercise annually with HR, Legal, and Compliance.

Sample incident vignette:

- Detection: sudden increase in candidate appeals.

- Triage: Data Steward runs fairness test within 48 hours.

- Remediation: pause model, revert to human review, notify impacted candidates per policy.

- Reporting: file internal incident report and evaluate regulator notification requirements.
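The vignette's 48-hour triage window is easy to miss without a tracked deadline. The minimal incident record below is a sketch. The class and field names are hypothetical, and the timestamps are invented.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import Optional

TRIAGE_SLA = timedelta(hours=48)  # "Data Steward runs fairness test within 48 hours"


@dataclass
class Incident:
    """Hypothetical incident record following the detect/triage/remediate/report steps."""
    system: str
    detected_at: datetime
    description: str
    triage_owner: str = "Data Steward"
    fairness_test_at: Optional[datetime] = None
    log: list[tuple[str, str]] = field(default_factory=list)

    @property
    def triage_due(self) -> datetime:
        return self.detected_at + TRIAGE_SLA

    def triage_overdue(self, now: datetime) -> bool:
        """True once the SLA has passed without a completed fairness test."""
        return self.fairness_test_at is None and now > self.triage_due

    def record(self, when: datetime, step: str) -> None:
        self.log.append((when.isoformat(), step))


inc = Incident("Resume screener", datetime(2024, 5, 6, 9, 0), "Sudden increase in candidate appeals")
inc.record(inc.detected_at, "detection: appeal spike flagged")
print("triage due:", inc.triage_due)
print("overdue at 2024-05-08 10:00?", inc.triage_overdue(datetime(2024, 5, 8, 10, 0)))
```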

Map incident types to state privacy and EEO reporting obligations, keep the playbook as a living sprint artifact, and test the communication templates during each tabletop exercise.

Conclusion — Action Plan and Next Step

Structure HR AI governance around prioritized risk, clear accountability, and ongoing monitoring.

This quarter: pick one or two high-impact systems, run the 30‑day inventory, complete the 60‑day risk assessment, and implement 90‑day controls and monitoring. If you need senior compliance coverage, consider a fractional CCO to take ownership of policy, audits, and regulator engagement.

One concrete goal: cut the compliance review cycle for releases from 10 days to 3 by embedding sign‑off into your sprint gates.

FAQs

Q: What is AI governance in HR and why does it matter?

A: AI governance in HR is the set of policies, roles, controls, and monitoring that ensure HR models are fair, legal, and auditable. Benefits: reduced legal exposure, fairer decisions, and faster, less risky launches.

Q: How do I start if my team has no data scientists?

A: Start with inventory and policy drafting. Use open-source testing toolkits and engage fractional experts for technical validation.

Q: Which HR systems are highest risk?

A: Candidate screening tools, compensation algorithms, termination/discipline automation, and any model that uses sensitive PII.

Q: How often should we re-test models in production?

A: Monthly for high-risk models, quarterly for medium-risk models, and after any material data or feature change.

Q: Can we rely solely on vendor attestations?

A: No. Attestations are necessary but not sufficient. Require independent validation, contractual audit rights, and continuous monitoring.

Q: What does a fractional CCO do for HR AI governance?

A: A fractional CCO owns policy, regulator liaison, audit dossier prep, and oversight of risk assessments and controls. They integrate with HR, product, and engineering to make governance operational.