Agentic AI Needs Compliance Assistance

Agentic AI needs compliance assistance: a practical guide to mapping AI decisions to a risk framework, setting up real-time monitoring, and securing audit-ready documentation in 30 days.

Introduction

Your AI system just triggered a $2 million compliance violation.

This scenario keeps CFOs awake at night as agentic AI systems make thousands of autonomous decisions daily. Traditional oversight breaks down when machines operate faster than human review cycles.

The math is stark: in documented cases, non-compliance fines have exceeded AI implementation savings by 300%. What started as a cost reduction becomes a budget catastrophe.

The Compliance Gap in Agentic AI Implementation

Agentic AI systems don't follow scripts. They analyze situations, weigh options, and execute actions independently to achieve programmed goals.

Your current compliance structure assumes human decision-makers who document reasoning and accept accountability. These autonomous systems operate in milliseconds, making thousands of micro-decisions that impact financial reporting and regulatory obligations.

The SEC, GDPR enforcement teams, and SOX auditors now target AI-driven financial decisions. Recent SEC roundtables spotlight governance gaps and fraud detection failures in autonomous systems.

Here's what triggers violations: AI makes customer-impacting decisions without documentation, executes trades beyond approved parameters, or processes personal data in violation of privacy regulations. The speed advantage becomes a liability when violations compound faster than they can be detected.

The guidance is clear: you cannot delegate compliance responsibility to AI systems or vendors. Legal accountability stays with your organization regardless of AI autonomy levels.

Real-Time Monitoring Challenges

Consider a manufacturing CFO whose AI purchasing system processes 2,000 vendor transactions per second while compliance verification runs on daily cycles.

This speed mismatch creates oversight gaps that regulators actively target. Standard logging captures inputs and outputs but misses reasoning pathways that privacy regulators require for enforcement defenses.
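To put the mismatch in numbers, here is a back-of-the-envelope sketch. It uses the 2,000 transactions per second from the scenario above and assumes, purely for illustration, that the purchasing system runs at that rate around the clock. The function name is hypothetical.

```python
# Illustrative arithmetic only: assumes a constant transaction rate for 24 hours.
TRANSACTIONS_PER_SECOND = 2_000   # figure from the scenario above
SECONDS_PER_DAY = 24 * 60 * 60    # one daily compliance verification cycle


def unreviewed_transactions(rate_per_second: int, review_interval_seconds: int) -> int:
    """Transactions that complete before the next compliance check runs."""
    return rate_per_second * review_interval_seconds


backlog = unreviewed_transactions(TRANSACTIONS_PER_SECOND, SECONDS_PER_DAY)
print(f"{backlog:,} transactions complete before each daily review")
```

Under that assumption, every daily verification run starts with a nine-figure backlog of decisions that have already taken effect.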

AI systems optimize for outcomes, not compliance narratives. You need translation layers that most organizations overlook during planning.

Think of AI compliance like air traffic control. Both require real-time monitoring of fast-moving systems where failure costs are catastrophic. Air traffic controllers can't review yesterday's flight paths to prevent today's collisions.

Manufacturing companies face unique risks: procurement AI making supplier decisions affecting conflict minerals reporting, pricing algorithms triggering antitrust concerns, or quality control systems creating product liability issues.

Vendor Accountability Issues

AI service providers shift compliance responsibility through contract disclaimers. They position platforms as tools while you bear full regulatory risk.

Hidden compliance costs appear through data residency fees, audit support charges, and premium pricing for regulatory-grade logging. Standard service tiers rarely include documentation depth that financial regulators demand.

Contract negotiations reveal the gap: vendors offer basic logging for $50,000 annually but charge $200,000 for audit-ready documentation. The price difference reflects compliance complexity that executives may underestimate.

Most AI vendors structure agreements to minimize their liability while maximizing your exposure, but legal teams may not discover these imbalances until the contract has been signed.

Step 1: Map AI Decisions to Regulatory Requirements

Connect each AI function to specific compliance mandates. Create matrices linking AI capabilities to SOX controls, SEC reporting requirements, and industry mandates.
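A mapping matrix can start as a simple lookup table. The sketch below is a minimal Python illustration, not a prescribed format: the AI function names are hypothetical placeholders, and the mandate labels simply reuse the categories named above.

```python
from collections import defaultdict

# Hypothetical AI functions and the mandates they touch; replace with your own inventory.
COMPLIANCE_MATRIX = {
    "invoice_approval": ["SOX controls", "SEC reporting"],
    "vendor_selection": ["SOX controls", "Industry mandates"],
    "customer_chat": ["Industry mandates"],
}


def functions_by_mandate(matrix: dict) -> dict:
    """Invert the matrix so each mandate lists the AI functions it covers."""
    inverted = defaultdict(list)
    for function, mandates in matrix.items():
        for mandate in mandates:
            inverted[mandate].append(function)
    return dict(inverted)


def unmapped_functions(matrix: dict, deployed: list) -> list:
    """Deployed AI functions that have no compliance mapping yet."""
    return sorted(set(deployed) - set(matrix))
```

The second helper is the more useful one in practice: any deployed function it returns is running without a documented link to a control.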

Define decision boundaries by risk level. Route high-risk AI actions through human approval while routine operations proceed automatically. Transaction amounts, customer impact levels, and regulatory sensitivity determine routing rules.
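The routing rule can be sketched in a few lines of Python. This is an illustration under stated assumptions: the thresholds and field names below are hypothetical, and the real values belong to your compliance and legal teams.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Decision:
    amount_usd: float
    customers_affected: int
    regulated: bool  # e.g. touches financial reporting or personal data


# Hypothetical thresholds for illustration only.
AMOUNT_LIMIT_USD = 10_000
CUSTOMER_LIMIT = 100


def route(decision: Decision) -> str:
    """Return 'human_approval' for high-risk actions, otherwise 'auto'."""
    if decision.regulated:
        return "human_approval"
    if decision.amount_usd > AMOUNT_LIMIT_USD:
        return "human_approval"
    if decision.customers_affected > CUSTOMER_LIMIT:
        return "human_approval"
    return "auto"
```

For example, route(Decision(250.0, 1, False)) returns "auto", while the same purchase flagged as regulated is held for a person to approve.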

Deploy audit-ready logging systems. Standard application logs miss contextual factors regulators need. Model monitoring platforms provide enterprise-grade tracing that maps AI decisions to business outcomes.

Set authority limits for different AI agents. Financial transaction AI requires stricter controls than customer service systems.
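One way to express per-agent authority is a deny-by-default policy table. The sketch below assumes two hypothetical agents with made-up limits; it illustrates the idea of stricter controls on the financial agent (a second approver on every action) rather than recommending specific values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AuthorityPolicy:
    allowed_actions: frozenset
    max_amount_usd: float
    needs_second_approver: bool


# Hypothetical agents, actions, and limits for illustration only.
POLICIES = {
    "financial_transactions": AuthorityPolicy(frozenset({"pay_invoice"}), 5_000, True),
    "customer_service": AuthorityPolicy(frozenset({"issue_refund", "send_reply"}), 200, False),
}


def authorize(agent: str, action: str, amount_usd: float = 0.0) -> str:
    """Return 'deny', 'allow', or 'allow_with_second_approver'."""
    policy = POLICIES.get(agent)
    # Unknown agents and unlisted actions are denied by default.
    if policy is None or action not in policy.allowed_actions:
        return "deny"
    if amount_usd > policy.max_amount_usd:
        return "deny"
    return "allow_with_second_approver" if policy.needs_second_approver else "allow"
```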

The NIST AI Risk Management Framework (AI RMF) offers structured guidance for governance deployment. Its companion playbook provides documentation practices and accountability structures aligned with federal expectations.

Regulatory Mapping Process

Start with your existing compliance calendar. Map AI decision points to quarterly reporting cycles, annual audits, and regulatory filing deadlines.

SOX compliance requires: documented controls for AI affecting financial reporting, segregation of duties in AI oversight, and testing procedures for automated controls.

SEC reporting demands: disclosure of AI risks in 10-K filings, explanation of AI impact on revenue recognition, and documentation of AI-driven trading activities.

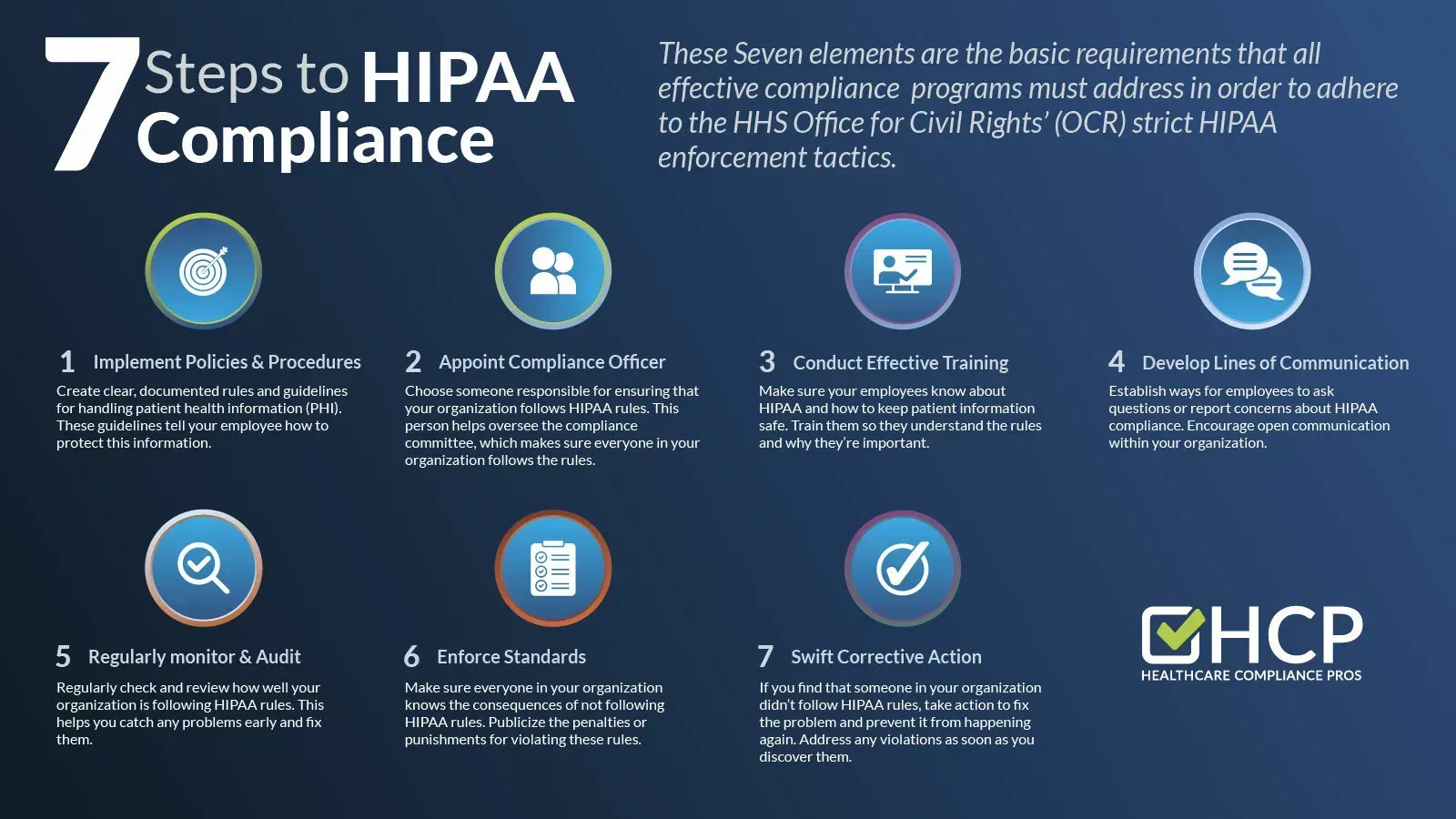

Industry-specific mandates vary: healthcare AI must meet HIPAA standards, financial services face additional OCC guidance, and manufacturers deal with product safety regulations.

Step 2: Monitor AI Compliance in Real-Time

Deploy continuous monitoring that flags potential violations as they occur. Automated monitoring systems track behavioral drift and anomalous decision patterns indicating compliance risks.

Configure alerts for AI behaviors that exceed approved parameters. Balance sensitivity with operational efficiency to avoid overwhelming your compliance team.
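A simple way to balance sensitivity against alert fatigue is to fire only when several recent decisions breach the approved range, not on every single outlier. The class below is a minimal sketch of that idea; the window size and breach count are hypothetical tuning knobs, and a production monitor would likely track more than one numeric value.

```python
from collections import deque


class ParameterMonitor:
    """Alert when too many recent decisions fall outside the approved range."""

    def __init__(self, low: float, high: float, window: int = 100, max_breaches: int = 3):
        self.low = low
        self.high = high
        self.max_breaches = max_breaches
        # Sliding window of booleans; True marks a decision outside the range.
        self.recent = deque(maxlen=window)

    def observe(self, value: float) -> bool:
        """Record one decision value; return True if an alert should fire."""
        self.recent.append(not (self.low <= value <= self.high))
        return sum(self.recent) >= self.max_breaches
```

Raising max_breaches or widening the window makes the monitor quieter; lowering them makes it more sensitive. With max_breaches set to 1 it alerts on every breach.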

Build executive dashboards connecting AI performance to regulatory risk indicators. Your board needs clear compliance visibility without technical complexity.

Automated Documentation Systems

Set up systems generating audit-ready explanations of AI reasoning. Explainability tools like Fiddler create human-readable explanations of AI logic for compliance teams.

Create compliance reports translating technical AI actions into language auditors understand. You need interpretation layers connecting model outputs to business impact.

Build retention policies for AI documentation aligned with regulatory timelines. Data monitoring platforms automate retention management based on regulatory requirements.
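Retention rules reduce to date arithmetic once the periods are agreed. The sketch below uses hypothetical record types and retention periods; the actual periods depend on which regulations apply to you and should be confirmed with counsel.

```python
from datetime import date

# Hypothetical retention periods in years; confirm real values with counsel.
RETENTION_YEARS = {"financial_decision": 7, "customer_interaction": 3}


def purge_eligible_on(record_type: str, created: date) -> date:
    """First date on which a record of this type may be deleted."""
    years = RETENTION_YEARS[record_type]
    try:
        return created.replace(year=created.year + years)
    except ValueError:
        # Created on Feb 29 and the target year is not a leap year.
        return created.replace(year=created.year + years, month=3, day=1)


def can_purge(record_type: str, created: date, today: date) -> bool:
    """True once the retention period for the record has fully elapsed."""
    return today >= purge_eligible_on(record_type, created)
```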

Documentation must capture: decision inputs, processing logic, alternative options considered, confidence levels, and human override capabilities.
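Those five elements translate directly into a record structure. The following is a minimal sketch, assuming a JSON log format; the class and field names are illustrative and do not follow any particular vendor's schema.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class DecisionRecord:
    decision_id: str
    inputs: dict                    # decision inputs
    processing_logic: str           # e.g. model version and rule applied
    alternatives_considered: list   # options the system rejected
    confidence: float               # 0.0 to 1.0
    human_override_available: bool
    recorded_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def to_json(self) -> str:
        """Serialize with stable key order so records are easy to diff."""
        return json.dumps(asdict(self), sort_keys=True)
```

A record might look like DecisionRecord("d-1", {"amount": 120}, "rule: under approval limit", ["reject"], 0.9, True). Writing one per decision gives auditors the inputs, logic, and alternatives in a single place.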

Exception Management Protocols

Design manual override systems for AI decisions triggering compliance concerns. Override capabilities must maintain audit trails documenting human intervention reasoning.
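The key property is that an override cannot happen without a recorded reason. Here is a minimal sketch of that constraint; the in-memory list stands in for whatever append-only store you actually use, and all names are hypothetical.

```python
from datetime import datetime, timezone

# Stand-in for append-only audit storage; an assumption for illustration.
override_log = []


def override_decision(decision_id: str, reviewer: str, new_outcome: str, reason: str) -> dict:
    """Record a human override; refuse overrides that lack documented reasoning."""
    if not reason.strip():
        raise ValueError("An override must include the reviewer's reasoning")
    entry = {
        "decision_id": decision_id,
        "reviewer": reviewer,
        "new_outcome": new_outcome,
        "reason": reason.strip(),
        "overridden_at": datetime.now(timezone.utc).isoformat(),
    }
    override_log.append(entry)
    return entry
```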

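Create review processes for AI actions in regulatory gray areas. GDPR Article 22, which covers solely automated decisions with legal or similarly significant effects, gives affected individuals the right to obtain human intervention, so the intervention protocol has to exist before the system goes live.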

Establish legal team communication when AI creates compliance risks. Early legal engagement prevents issues from becoming enforcement actions.

Step 3: Control AI Technology Expenses

Track AI spending across multiple vendor relationships to ensure accurate financial reporting. AI costs span cloud infrastructure, software licenses, professional services, and data acquisition.
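Consolidating invoices by cost category is a small aggregation job. The sketch below assumes invoices arrive as (vendor, category, amount) tuples, which is a simplification, and reuses the four cost categories named above as hypothetical labels.

```python
from collections import defaultdict

# Category labels taken from the four cost areas above; the identifiers are illustrative.
CATEGORIES = {
    "cloud_infrastructure",
    "software_licenses",
    "professional_services",
    "data_acquisition",
}


def spend_by_category(invoices: list) -> dict:
    """Total spend per category from (vendor, category, amount_usd) tuples."""
    totals = defaultdict(float)
    for vendor, category, amount_usd in invoices:
        if category not in CATEGORIES:
            # Surface miscoded invoices instead of silently dropping them.
            raise ValueError(f"Unknown category {category!r} on invoice from {vendor}")
        totals[category] += amount_usd
    return dict(totals)
```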

FinOps practices provide allocation and forecasting guidance supporting board-level financial controls. Cost forecasting playbooks help anticipate variable AI expenses for budget planning.

Monitor hidden fees impacting compliance reporting accuracy. Overage charges and premium features accumulate quickly in production systems.

Typical AI cost surprises include: data transfer fees escalating from $5,000 to $50,000 monthly, compliance logging premiums adding 40% to base costs, and model retraining expenses spiking during regulatory changes.

The FinOps Foundation's expansion into AI cost governance reflects mainstream financial discipline expectations. Boards expect the same spending controls for AI as traditional investments.

AI Budget Planning

Quarterly AI expense reviews reveal patterns: training costs spike during model updates, inference costs grow with user adoption, and compliance costs increase during regulatory changes.

Build AI budgets with 25-30% contingency for compliance-driven expenses. Regulatory requirements often emerge mid-deployment, forcing expensive retrofits.
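As a worked example of the 25-30% contingency range, the sketch below applies both ends of the range to a hypothetical $1,000,000 base budget. The base figure is an assumption chosen only to keep the arithmetic easy to check.

```python
def budget_with_contingency(base_usd: int, contingency_pct: int) -> float:
    """Base budget plus a percentage contingency for compliance-driven expenses."""
    if not 0 <= contingency_pct <= 100:
        raise ValueError("contingency_pct must be between 0 and 100")
    return base_usd * (100 + contingency_pct) / 100


BASE_BUDGET_USD = 1_000_000  # hypothetical annual AI budget
low = budget_with_contingency(BASE_BUDGET_USD, 25)
high = budget_with_contingency(BASE_BUDGET_USD, 30)
print(f"Plan for ${low:,.0f} to ${high:,.0f}")
```

On a $1,000,000 base, that means setting aside an extra $250,000 to $300,000.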

Track AI ROI against compliance costs to maintain board support. Demonstrate that compliance investments protect rather than diminish AI value.

Step 4: Reporting Requirements for AI Compliance

Your board needs AI compliance visibility without technical overwhelm. Create reporting structures connecting AI risks to business outcomes they understand.

Monthly board packages should include: AI decision volumes, compliance violations detected, regulatory communications received, and audit findings resolved.
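If your systems already log compliance events, the four monthly figures can be rolled up automatically. This is a minimal sketch that assumes each event is tagged with one of four hypothetical type strings; your event taxonomy will differ.

```python
from collections import Counter


def monthly_board_summary(events: list) -> dict:
    """Roll up a month of event-type strings into the four board metrics."""
    counts = Counter(events)
    # Counter returns 0 for event types that never occurred.
    return {
        "ai_decision_volume": counts["decision"],
        "violations_detected": counts["violation"],
        "regulatory_communications": counts["regulator_contact"],
        "audit_findings_resolved": counts["finding_resolved"],
    }
```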

Quarterly deep dives require: risk assessment updates, vendor performance reviews, compliance cost analysis, and regulatory landscape changes.

Structure AI risk reporting similar to cybersecurity briefings. Boards understand security risks and expect similar clarity for AI compliance.

Risk Communication Strategies

Translate technical AI risks into financial impact scenarios. "Model drift" becomes "revenue recognition errors affecting quarterly earnings."

Use familiar risk categories: operational risk for AI system failures, regulatory risk for compliance violations, and reputational risk for biased AI decisions.

Create AI risk heat maps showing probability and impact of different violation scenarios. Visual representations help boards prioritize attention and resources.
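The data behind a heat map is a probability-times-impact score per scenario. The sketch below uses a common 1-to-5 scale for each axis; the scenarios, ratings, and band cutoffs are hypothetical and should be replaced with your own risk assessment.

```python
# Hypothetical scenarios rated (probability, impact), each on a 1-5 scale.
SCENARIOS = {
    "trading limit breach": (2, 5),
    "privacy rule violation": (4, 4),
    "undocumented customer decision": (3, 2),
}


def band(score: int) -> str:
    """Map a 1-25 score to a heat band; the cutoffs are illustrative."""
    if score >= 15:
        return "high"
    if score >= 6:
        return "medium"
    return "low"


def heat_map(scenarios: dict) -> list:
    """Return (name, score, band) rows sorted from highest to lowest risk."""
    rows = []
    for name, (probability, impact) in scenarios.items():
        if not (1 <= probability <= 5 and 1 <= impact <= 5):
            raise ValueError(f"Ratings for {name!r} must be between 1 and 5")
        score = probability * impact
        rows.append((name, score, band(score)))
    return sorted(rows, key=lambda row: row[1], reverse=True)
```

Sorting by score puts the scenario that deserves the board's attention first, which is the point of the visual.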

Present AI compliance as insurance rather than cost center. Frame compliance investments as protecting enterprise value from regulatory penalties.

Common AI Compliance Mistakes

Assuming vendors handle compliance automatically. A mid-market manufacturer discovered their AI vendor's "compliance-ready" platform lacked SOX-required audit trails, creating a $500,000 remediation project.

Deploying AI without updating compliance policies. Current regulatory evolution requires proactive policy updates, not reactive fixes.

Skipping compliance team AI training. Your staff need technical AI understanding to assess regulatory risks during enforcement inquiries. Compliance teams trained only on traditional processes miss AI-specific violations.

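Ignoring data residency implications. GDPR restricts transfers of personal data outside the EU (Chapter V), and Article 22 separately limits solely automated decisions that significantly affect individuals. Cloud-based AI often processes EU data in jurisdictions without an approved transfer mechanism.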

Missing accountability chains. Compliance systems must specify which people are responsible for AI outputs during enforcement actions. Regulatory agencies reject "the AI decided" as a legal defense.

Prevention Strategies

Run compliance tabletop exercises simulating AI violations. Practice response procedures before facing real enforcement actions.

Audit AI vendors quarterly rather than relying on annual assessments. AI capabilities and compliance postures change rapidly.

Document AI decision logic in business terms, not technical specifications. Regulators need to understand why AI made specific choices.

FAQs

How quickly can AI trigger compliance violations?

Agentic AI systems can trigger violations within milliseconds of deployment. Unlike humans, who consider compliance implications, AI optimizes for programmed objectives without regulatory awareness. Trading algorithms can exceed position limits, customer service AI can violate privacy rules, and pricing systems can create discriminatory outcomes in seconds.

What documentation do regulators expect from AI?

Regulators expect explainable decision trails connecting inputs, processing logic, and outputs in human-readable formats. Auditors demand the same reasoning transparency from AI as human decisions, including data sources, decision criteria, and alternatives considered.

Who's liable when AI violates regulations?

Your organization bears full legal liability regardless of vendor disclaimers or AI autonomy levels. Regulators hold companies accountable for all AI-made business decisions, treating AI as a tool rather than an independent legal entity.

How do we balance AI speed with compliance oversight?

Apply risk-based governance with proportional oversight based on decision impact. High-risk decisions require human approval while routine operations proceed within pre-approved boundaries. Automated monitoring provides continuous oversight without slowing operations.

What compliance training do teams need?

Teams need training on AI-specific regulatory requirements, technical capabilities and limitations, documentation standards, escalation procedures, and legal accountability structures. Technical teams need compliance awareness while compliance teams need AI literacy.

How often should we audit AI compliance?

AI compliance requires continuous monitoring with formal audits quarterly for high-risk deployments and annually for lower-risk deployments. AI decision speed demands more frequent review cycles than traditional processes.

What are typical penalties for AI violations?

Penalties reach millions depending on the regulatory body and violation severity. SEC enforcement actions average $2.8 million, while GDPR fines can reach 4% of global annual turnover or €20 million, whichever is higher. Privacy violations and discriminatory AI carry particularly severe consequences.

Conclusion

Proactive AI compliance planning prevents violations costing more than deployments save.

Build compliance architecture before deployment, not after enforcement actions.

Smart CFOs recognize compliance costs as insurance premiums protecting organizational reputation and financial performance. The investment in proper oversight delivers returns through avoided penalties and operational confidence.

Your next 30-day action plan: audit current AI vendor contracts for compliance gaps, train your compliance team on AI-specific risks, and establish board reporting metrics for AI governance oversight.