AI Compliance Checklist: Sprint-Ready Guide for Startups

AI Compliance Checklist for startups: a sprint-ready guide covering governance, data, model validation, consumer protections, and audit readiness to avoid launch delays.

Introduction — Why AI compliance matters now

Stop your releases from getting held up.

Startups shipping AI features face real regulatory risk. Put an “AI Compliance Checklist” on your sprint board from day one to avoid fines, product holds, and reputation hits. In fintech, a misclassified model can pause a rollout or trigger CFPB and state inquiries that cost months. This guide uses a practical lifecycle approach (Govern, Data, Model, Consumer, Ops) to give you step-by-step, sprint-ready tasks you can map into Jira.

How to use this checklist effectively

Treat this checklist as a sprint playbook and assign one owner per item. Map each item to a Jira ticket or epic, set a review SLA, and track closure in your release notes.

Do this now: add “AI Compliance” as a required review in your template. That creates a hard product gate.

Review cadence: pre-launch gate, 30-day post-release review, and quarterly risk reviews. Store artifacts somewhere with version history (a GitHub repo, or Notion or Confluence pages with page history). Tag model versions and datasets with immutable IDs so you can reproduce results. For operational templates and crosswalks, consult the NIST AI RMF Playbook and its implementation resources.

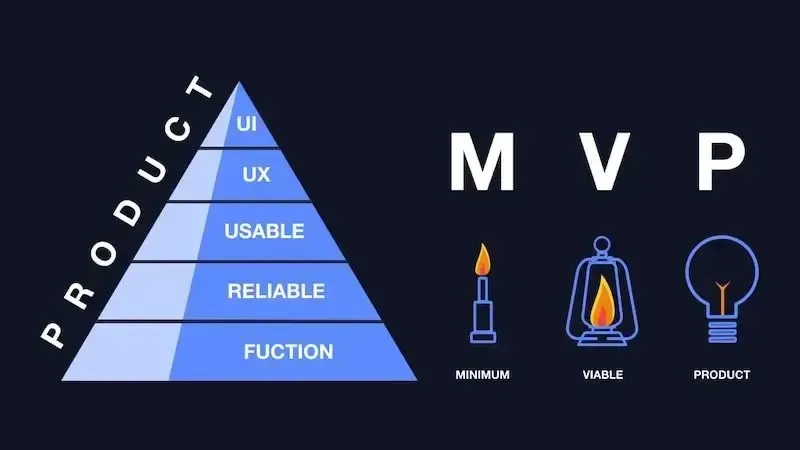

Prioritize by risk tier. MVP = lightweight guards (data inventory, basic model card, consumer notices). Scale release = full validation, vendor audits, and examiner-ready evidence packs. Link checklist items to internal policies and the external references below for examiner credibility.

Sprint steps for the first week:

- Create an “AI Owner” field on product specs.

- Run a high-level data inventory for the model in scope.

- Add a validation ticket for your highest-risk model.

These three steps buy you immediate runway.

Governance and risk ownership checklist

Assign a single accountable owner for AI risk. Make that person the formal contact for regulators and the approver for model release. Do this immediately: add “AI Owner” as a field on every product spec. Create a visible RACI that covers Legal, Product, Engineering, Security, and Ops. Publish it on the product board and require sign-off for any feature touching model logic. Keep the RACI to one page and a single table for quick reference.

Document an AI governance charter that states scope, risk appetite, decision authority, and escalation paths. Keep the charter short: one page, plus a one-line summary attached to specs. Align the charter with the NIST AI RMF core functions (Govern, Map, Measure, Manage) so your audit narrative maps to recognized functions.

Set measurable KPIs:

- Review SLA (e.g., legal signoff in 72 hours).

- Open-issue aging (max 30 days).

- Number of untriaged consumer complaints.

- Mean time to remediate high-risk defects.
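
KPIs like open-issue aging are easy to compute from a tracker export. The sketch below is illustrative only: the issue records, field names, and `overdue_issues` helper are assumptions, not part of any tracker's API.

```python
from datetime import date

# Hypothetical issue records; in practice these would come from your tracker's export.
issues = [
    {"id": "AI-1", "opened": date(2024, 1, 2), "closed": None},
    {"id": "AI-2", "opened": date(2024, 2, 20), "closed": None},
    {"id": "AI-3", "opened": date(2024, 1, 10), "closed": date(2024, 1, 15)},
]

MAX_AGE_DAYS = 30  # mirrors the "max 30 days" aging KPI


def overdue_issues(issues, today, max_age_days=MAX_AGE_DAYS):
    """Return IDs of open issues older than the aging limit."""
    return [
        issue["id"]
        for issue in issues
        if issue["closed"] is None
        and (today - issue["opened"]).days > max_age_days
    ]


print(overdue_issues(issues, today=date(2024, 3, 1)))  # ['AI-1']
```

Passing `today` in explicitly keeps the check reproducible, which helps when the same report has to be regenerated for a later review.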

Maintain a decision log with timestamps, rationale, and sign-offs for material choices. This is exam gold, as regulators want to see why you accepted a risk.
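
One way to make a decision log tamper-evident is to chain each entry to the previous one with a hash. This is a minimal sketch under assumptions: the field names and the `append_decision` and `verify_chain` helpers are hypothetical, and a real log would live in durable storage rather than an in-memory list.

```python
import hashlib
import json
from datetime import datetime, timezone


def append_decision(log: list, decision: str, rationale: str, approver: str) -> dict:
    """Append an entry whose hash also covers the previous entry's hash."""
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "decision": decision,
        "rationale": rationale,
        "approver": approver,
        "prev_hash": log[-1]["entry_hash"] if log else "",
    }
    payload = json.dumps(entry, sort_keys=True).encode("utf-8")
    entry["entry_hash"] = hashlib.sha256(payload).hexdigest()
    log.append(entry)
    return entry


def verify_chain(log: list) -> bool:
    """Recompute every hash and check each entry links to the one before it."""
    prev_hash = ""
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "entry_hash"}
        payload = json.dumps(body, sort_keys=True).encode("utf-8")
        if entry["prev_hash"] != prev_hash:
            return False
        if hashlib.sha256(payload).hexdigest() != entry["entry_hash"]:
            return False
        prev_hash = entry["entry_hash"]
    return True


log = []
append_decision(log, "Accept vendor model v2", "Passed validation plan", "AI Owner")
append_decision(log, "Defer retraining", "Drift below alert threshold", "AI Owner")
print(verify_chain(log))  # True
```

Editing any earlier entry changes its hash and breaks the chain, so a reviewer can check that the log was not rewritten after the fact.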

A short, concrete example: a fintech paused a loan-product launch for two months because no one owned licensing decisions. Assigning an owner cut that drag time to days in later launches.

Do this sprint ticket: publish the one-page RACI and assign the AI Owner. Expect pushback; hold your ground politely and set the SLA.

Data governance and privacy checklist

Inventory everything used for training, validation, and inference. Tag fields as PII, sensitive, derived, or synthetic. Keep a living data map that links to dataset snapshots.
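
A data map can start as a small typed structure kept next to the code. The sketch below assumes hypothetical field names and a hypothetical `FieldEntry` record; the four tags come from the paragraph above.

```python
from dataclasses import dataclass
from enum import Enum


class FieldTag(Enum):
    PII = "pii"
    SENSITIVE = "sensitive"
    DERIVED = "derived"
    SYNTHETIC = "synthetic"


@dataclass(frozen=True)
class FieldEntry:
    name: str
    tags: frozenset     # set of FieldTag values
    used_in: frozenset  # subset of {"training", "validation", "inference"}


# Illustrative entries only; the field names are made up for this example.
data_map = [
    FieldEntry("account_number",
               frozenset({FieldTag.PII, FieldTag.SENSITIVE}),
               frozenset({"training", "inference"})),
    FieldEntry("txn_amount_7d_avg",
               frozenset({FieldTag.DERIVED}),
               frozenset({"training", "inference"})),
    FieldEntry("merchant_category", frozenset(), frozenset({"training"})),
]

# Fields nobody has tagged yet, queued for review.
untagged = [f.name for f in data_map if not f.tags]
# PII that reaches the live inference path.
pii_at_inference = [
    f.name for f in data_map
    if FieldTag.PII in f.tags and "inference" in f.used_in
]

print(untagged)          # ['merchant_category']
print(pii_at_inference)  # ['account_number']
```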

Minimize data collection. If a field doesn’t improve model performance or meet compliance requirements, don’t ingest it. Set retention rules tied to business need and applicable state laws.

Secure training and inference environments. Use role-based access, encryption at rest and in transit, and immutable logs for dataset access. Log who accessed raw data and when. The examiner will ask.

Align handling with sector rules: GLBA for financial data, state privacy statutes such as California’s CCPA/CPRA and comparable laws in other states, and cross-border transfer constraints. Run Privacy Impact Assessments (PIAs) and document them. Use the PIA templates and guidance from IAPP.

Validate continuously. Schedule PIAs and threat-modeling sessions, and convert findings into remediation tickets. For external datasets, require signed provenance and licensing paperwork before ingestion.

Quick micro-checklist (Data):

- Data map linked to dataset snapshots.

- Field tags for PII/sensitive/derived.

- Retention policy tied to product need.

- Access logs and encryption.

- PIA completed and published internally.

Example: For a payments-risk model, tag account number and transaction amount as PII/sensitive. Snapshot the dataset used for training and store the hash in your repo. That single action answers the first question an examiner will ask.
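
Hashing a snapshot takes a few lines with Python's standard library. This is a sketch, assuming the snapshot is a single file on disk; the `dataset_sha256` name is illustrative.

```python
import hashlib
from pathlib import Path


def dataset_sha256(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the SHA-256 hex digest of a file.

    The file is streamed in chunks so large snapshots do not need to fit in memory.
    """
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()
```

Committing the returned digest alongside the model version lets anyone confirm later that a given file is the exact snapshot used for training.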

Model development and validation checklist

Require a model card for every production model. The model card must state purpose, intended users, limitations, and evaluation metrics. Use the conventions from TensorFlow’s Model Card Toolkit to standardize the format.
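
A model card can also be kept as structured data so a script can verify that the required sections are filled in. The sketch below is a plain dataclass, not the schema of any model-card library; the `ModelCard` class, its field names, and the example values are all assumptions for illustration.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ModelCard:
    name: str
    version: str
    purpose: str
    intended_users: list
    limitations: list
    evaluation_metrics: dict = field(default_factory=dict)

    def missing_fields(self) -> list:
        """Names of required sections that are still empty."""
        return [key for key, value in asdict(self).items() if not value]


card = ModelCard(
    name="payments-risk",
    version="1.0.0",
    purpose="Flag transactions for manual fraud review",
    intended_users=["fraud operations analysts"],
    limitations=[],                    # left empty on purpose to show the check
    evaluation_metrics={"auc": 0.87},  # placeholder value, not a real result
)

print(card.missing_fields())  # ['limitations']
print(json.dumps(asdict(card), indent=2))
```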

Design validation plans that test performance, fairness, drift, and resilience before deployment. Tier tests by risk: high-risk models (credit decisions, fraud scoring) need statistical tests, subgroup analysis, and human review. Lower-risk models need a lighter set.

Institute versioning and reproducible pipelines. Snapshot datasets, seed values, hyperparameters, and code. Keep a retraining ledger that ties model versions to deployment timestamps. Gate deployments with pre-production checklists and signoffs from Product, Engineering, and Compliance. Log inputs and outputs to support explainability and to answer “why” requests.
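
A deployment gate can be a small function that lists what is still missing before release. This is a sketch under assumptions: the release record layout, the artifact keys, and the `release_blockers` helper are hypothetical; the three sign-off roles come from the paragraph above.

```python
REQUIRED_ARTIFACTS = ("dataset_hash", "seed", "hyperparameters", "code_commit")
REQUIRED_SIGNOFFS = ("product", "engineering", "compliance")


def release_blockers(release: dict) -> list:
    """Return human-readable reasons this release cannot ship yet."""
    blockers = [
        f"missing artifact: {name}"
        for name in REQUIRED_ARTIFACTS
        if release.get(name) in (None, "")  # a seed of 0 is still a valid value
    ]
    signoffs = release.get("signoffs", ())
    blockers += [
        f"missing signoff: {role}"
        for role in REQUIRED_SIGNOFFS
        if role not in signoffs
    ]
    return blockers


release = {
    "dataset_hash": "abc123",  # placeholder digest
    "seed": 0,
    "hyperparameters": {"learning_rate": 0.1},
    "code_commit": "deadbeef",  # placeholder commit ID
    "signoffs": ["product", "engineering"],
}
print(release_blockers(release))  # ['missing signoff: compliance']
```

Run in CI, a non-empty result can fail the pipeline, which turns the checklist into an enforced gate rather than a convention.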

Use explainability tools to produce evidence. SHAP is useful for both local and global explanations, and LIME for local ones; include exportable outputs in your evidence pack.

For fairness testing and mitigation, use toolkits like IBM AIF360 and Fairlearn. These help you quantify group disparities and produce mitigation artifacts.

Example checklist (Model):

- Model card in repo.

- Validation plan executed & signed.

- Version snapshot and dataset hash stored.

- Explainability outputs attached.

- Deployment signoff stamped.

Mini-example: During validation for a scoring model, subgroup analysis showed one group with higher false negatives, and SHAP helped identify the features driving the gap. The team added a targeted fairness mitigation and re-ran tests. The mitigation reduced disparity and produced a clear test artifact to include in the exam pack.
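
The subgroup check in that example comes down to comparing false negative rates across groups. Here is a dependency-free sketch using toy labels; the data is made up for illustration, and a toolkit such as Fairlearn offers fuller disaggregated metrics for production use.

```python
from collections import defaultdict


def false_negative_rate_by_group(y_true, y_pred, groups):
    """FNR per group = missed positives / actual positives."""
    positives = defaultdict(int)
    misses = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 1:
            positives[group] += 1
            if pred == 0:
                misses[group] += 1
    # Groups with no actual positives are omitted because their FNR is undefined.
    return {group: misses[group] / positives[group] for group in positives}


# Toy labels for illustration only, not real results.
y_true = [1, 1, 1, 1, 0, 1, 1, 1, 1, 0]
y_pred = [1, 1, 1, 0, 0, 1, 0, 0, 1, 0]
groups = ["a"] * 5 + ["b"] * 5

rates = false_negative_rate_by_group(y_true, y_pred, groups)
gap = max(rates.values()) - min(rates.values())
print(rates)  # {'a': 0.25, 'b': 0.5}
print(gap)    # 0.25
```

Storing the per-group rates before and after mitigation gives you the test artifact for the evidence pack.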

Do this in your sprint: require the model card and validation ticket to close before deployment.

Consumer protections and transparency checklist

If AI materially affects a user, provide a plain-language disclosure. Use short, clear notices that explain what the model does and how to appeal decisions. For adverse actions such as credit denials, map your notices to ECOA/FCRA requirements. CFPB guidance makes clear that creditors must give specific reasons for a denial even when AI is used.

Offer human review and opt-out for high-risk actions. Track opt-outs and human-review outcomes. Make the remediation route fast and trackable. Monitor consumer complaints and route them directly to the model triage owner. Map complaint categories to model behavior and set thresholds that trigger emergency reviews.
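
Complaint thresholds can be checked with a simple counter over categorized complaints. In this sketch the category names, the limits, and the `categories_over_threshold` helper are hypothetical; set real thresholds from your own complaint baseline.

```python
from collections import Counter

# Hypothetical limits: complaints per category in the review window
# that should trigger an emergency review.
THRESHOLDS = {"wrongful_denial": 5, "unexplained_decision": 10}


def categories_over_threshold(complaints, thresholds=THRESHOLDS):
    """Return the categories whose complaint count has reached its limit."""
    counts = Counter(c["category"] for c in complaints)
    return sorted(
        category
        for category, limit in thresholds.items()
        if counts[category] >= limit
    )


# Illustrative complaint records for one review window.
complaints = (
    [{"category": "wrongful_denial"}] * 5
    + [{"category": "unexplained_decision"}] * 2
)
print(categories_over_threshold(complaints))  # ['wrongful_denial']
```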

Follow FTC advice on marketing claims and transparency. Avoid language that overstates model capabilities.

Quick consumer checklist:

- Disclosure template ready.

- Human-review flow documented.

- Complaint routing and SLA.

- Remediation playbook drafted.

Practical note: a short disclosure that clearly states why a decision was made reduces complaints. Keep the language plain and provide a clear appeal path.

Operational resilience and monitoring checklist

Define real-time monitoring metrics: accuracy, latency, error/failure rate, and fairness indicators. Automate alert thresholds and runbooks for triage. Detect drift early and schedule retraining. Automate drift checks and require manual signoff for model changes that alter user outcomes.
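
One common way to automate a drift check is the Population Stability Index (PSI), which compares the binned distribution of a feature or score in production against a baseline. The sketch below is one possible choice, not a required method; the bin edges and sample values are made up, and inputs are assumed to be non-empty.

```python
import math


def psi(expected, actual, edges, eps=1e-6):
    """Population Stability Index between a baseline and a live sample.

    `edges` are the interior bin boundaries, sorted ascending.
    Empty bins are floored at `eps` to avoid log(0) and division by zero.
    """
    def proportions(values):
        counts = [0] * (len(edges) + 1)
        for value in values:
            counts[sum(value > edge for edge in edges)] += 1
        return [max(count / len(values), eps) for count in counts]

    p_expected = proportions(expected)
    p_actual = proportions(actual)
    return sum(
        (a - e) * math.log(a / e) for e, a in zip(p_expected, p_actual)
    )


# Toy score samples for illustration only.
baseline = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8]
live = [0.6, 0.7, 0.8, 0.9, 0.8, 0.9, 0.7, 0.95]
edges = [0.25, 0.5, 0.75]

score = psi(baseline, live, edges)
# 0.25 is a widely used rule-of-thumb alert level, not a regulatory threshold.
print("drift alert" if score > 0.25 else "stable")
```

Whatever metric you choose, record the threshold and the reason for it in the decision log so the alert level is defensible later.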

Create incident response plans covering model failure, data breach, and regulator notification timelines. Include a clear escalation path to the CCO and General Counsel.

Maintain fallbacks: human-in-the-loop gates, rule-based overrides, and tested rollback procedures. Practice them until each team can revert to a safe state within defined SLAs.
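
A fallback path can be wrapped around the model call so that failures and low-confidence predictions never reach the user unreviewed. This is a sketch under assumptions: `decide`, the callables it takes, and the 0.8 confidence floor are all hypothetical.

```python
def decide(features, model_predict, rule_fallback, min_confidence=0.8):
    """Return (decision, source).

    Falls back to rules if the model call fails, and routes
    low-confidence predictions to human review.
    """
    try:
        label, confidence = model_predict(features)
    except Exception:
        # Model outage or bad input: use the rule-based override.
        return rule_fallback(features), "rule_fallback"
    if confidence < min_confidence:
        return "needs_human_review", "human_in_the_loop"
    return label, "model"


# Stub callables standing in for a real model and rule set.
def confident_model(features):
    return "approve", 0.95


def conservative_rules(features):
    return "decline"


print(decide({}, confident_model, conservative_rules))  # ('approve', 'model')
```

Returning the source alongside the decision makes it easy to log how often each fallback fires, which is useful evidence for drills and exams.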

Use uncertainty quantification tools to show model limits when presenting to examiners. IBM’s UQ360 uncertainty toolkit helps quantify prediction confidence and supports regulator conversations.

Run chaos drills for corrupted inputs, model outages, and adversarial examples. Document lessons and close remediation tickets.

Short resilience playbook:

- Monitor baseline metrics.

- Alarm on drift.

- Triage and human review.

- Rollback if necessary.

Do one thing this sprint: automate a drift check and wire its alert to Slack plus the decision log.

Legal, licensing, and vendor controls checklist

Map whether your AI product triggers state licensing (money transmission, lending, escrow). Build a 50-state filing schedule with timelines for common fintech activities. Prepare standard submission materials: policies, senior-decision attestations, process diagrams, and test scripts. Centralize filings and calendar renewals so nothing slips. If filings are blocking launches, consider short-term compliance help to build your 50-state filing schedule and manage submissions.

Flag federal regulators that may apply (FTC, CFPB, SEC, OCC) and, if you serve EU users, the EU AI Act, then map their guidance to your controls. Use the FTC AI Compliance Hub for current enforcement trends.

Vet vendors for data provenance and audit rights. Add contractual requirements: dataset provenance, audit access, SLAs for model updates, indemnities, and data-return clauses. Require SOC reports and periodic compliance evidence from vendors.

Step-by-step licensing starter:

- Identify trigger activities by state.

- Collect required documents.

- Publish a filing schedule with owners.

- Monitor renewals and centralize records.
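
The renewal-monitoring step can be automated with a short script over the filing schedule. Everything in this sketch is illustrative: the states, license types, owners, and dates are made-up placeholders, not real deadlines, and `renewals_due` is a hypothetical helper.

```python
from datetime import date, timedelta

# Placeholder schedule; replace with your actual filings and dates.
filings = [
    {"state": "NY", "license": "money_transmission", "owner": "legal",
     "renewal": date(2025, 12, 31)},
    {"state": "CA", "license": "lending", "owner": "legal",
     "renewal": date(2026, 6, 30)},
    {"state": "TX", "license": "money_transmission", "owner": "ops",
     "renewal": date(2025, 9, 30)},
]


def renewals_due(filings, today, window_days=90):
    """Return filings that are overdue or due within the window, soonest first."""
    cutoff = today + timedelta(days=window_days)
    due = [f for f in filings if f["renewal"] <= cutoff]
    return sorted(due, key=lambda f: f["renewal"])


for filing in renewals_due(filings, today=date(2025, 10, 15)):
    print(filing["state"], filing["license"], filing["renewal"], filing["owner"])
```

Overdue filings are included on purpose so that a missed renewal stays visible until someone closes it.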

Practical tip: store submission templates and proof-of-filing receipts in one shared folder and link them to the filing schedule. That one habit saves weeks during state reviews.

Audit readiness and testing checklist

Design test plans aligned to model risk tiers covering performance, fairness, security, and privacy. Automate routine tests and store outputs with timestamps. Turn test outputs into concise compliance reports showing KPIs, drift metrics, mitigation actions, and signoffs. These reports are the core evidence during exams. If you want a quick outside sanity check before an exam or investor call, a brief readiness review can reveal gaps fast.

Assemble an examiner-friendly evidence pack: policies, model cards, decision logs, test results, vendor certs, and incident playbooks. Use model-card examples as your evidence-pack template.

Practice mock exams with spokespeople. Record answers and improve briefing materials. Archive evidence with indexing standards for fast retrieval.
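
An evidence archive is faster to search when it ships with a manifest. The sketch below walks a folder and records each file's relative path, size, and SHA-256; the `build_manifest` name and the folder layout are assumptions, and files are read whole, so it suits documents rather than large datasets.

```python
import hashlib
import json
from pathlib import Path


def build_manifest(root: Path) -> list:
    """List every file under root with its relative path, size, and SHA-256."""
    manifest = []
    for path in sorted(p for p in root.rglob("*") if p.is_file()):
        manifest.append({
            "path": path.relative_to(root).as_posix(),
            "bytes": path.stat().st_size,
            "sha256": hashlib.sha256(path.read_bytes()).hexdigest(),
        })
    return manifest


def write_manifest(root: Path, out_file: Path) -> None:
    """Save the manifest as JSON so it can be versioned with the evidence pack."""
    out_file.write_text(json.dumps(build_manifest(root), indent=2))
```

Write the manifest outside the evidence folder, or regenerate it last, so it does not end up indexing itself.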

Exam pack checklist:

- Policies and model cards.

- Decision logs and test outputs.

- Vendor evidence and SOC reports.

- Incident playbooks and PIA summaries.

Do this: run a 1-day mock exam and log the gaps as Jira tickets. Then fix the top three before your next investor update.

Conclusion: Next steps

Do three things now: assign an accountable owner, run a full data inventory, and schedule validation for your highest-risk model. These steps remove most launch delays and reduce examiner exposure.

Make compliance a sprint requirement. Add the checklist items as mandatory PR gates and run your first mock exam within 60 days. If you do this, compliance becomes a predictable gate in your release process—not an emergency.

FAQs

What is an AI Compliance Checklist? A practical list of governance, data, model, consumer, operations, and legal controls to reduce risk when shipping AI.

When should a startup start one? Start at concept for any decision-affecting model, and formalize before the first public release.

How to prioritize for MVP vs. scale? MVP: data inventory, basic model card, consumer notices, and opt-out for high-risk actions. Scale: full validation, vendor audits, and examiner-ready evidence.

What documentation do regulators expect? Policies, model cards, test outputs, decision logs, vendor evidence, incident playbooks, and PIA summaries.

What signals require immediate action? Spikes in complaints, sharp performance drops, distributional drift, or signs of discriminatory outcomes.

Ballpark costs for tooling vs. advisory? Open-source toolkits reduce tooling costs, but expect advisory and filing costs for multistate compliance. Budget based on scope and filing volume.

Short, practical final note: assign an owner today, run the data inventory this week, and schedule a validation sprint for your highest-risk model. Do that and you’ll avoid the most common examiner headaches.